This post is taken from the report I wrote during my internship, which was part of my software test engineering education.

I got an insight into the work of a test lead and into how an organization of this size does its test planning, sets its test strategies and handles issues.

My internship was not a clear path focused on one project and how to work on it in the best way. I did three different projects. The first was about technical testing: an analysis of the system architecture, which tools are used, which areas the test lead has a vision to improve, and what can and cannot be done.

In a company that is at the start of implementing accessibility in its product, I got to use my knowledge of the topic in a real work project. Writing a checklist adapted to this specific company, to the test engineers' routines and to their level of knowledge was a challenge that taught me more about how to improve my test planning for future projects.

During the education we have had courses and lectures on various areas of software testing: strategies, planning and bug reporting. In this report I reflect on the difference between theory and practice. What I learned most from this internship is how to connect knowledge and skills to the right task.

Technical Test Analysis

Degree project

The plan was that I would write my degree project during the internship. The base concept was to investigate best practice for non-functional testing within microservices and how to set it up, as an experiential case study of this specific area of testing. While I was analyzing their system architecture and test architecture, I also talked with different people within the organization: test engineers, developers and tech leads, who shared their perspectives and opinions on what matters in terms of testing. With these facts and the change in the internship project plans, I started to lean towards changing the main approach for the degree project. I realized that the problem areas of working agile with cloud-based microservices are far more complex than just the area I had in mind at first.

So, I kept the main question but changed the approach to include: What are the problems within software testing when working agile with cloud-based microservices?

What could be a best-practice approach to reach the most effective testability, traceability and observability in a microservice environment? And what are test leads' impressions of the priority of testing microservices/container applications?

The thesis was written in parallel with the internship, which gave me an opportunity to look closer into areas I might not have been able to otherwise. I will not go into the technical details or findings of the research here; for those I recommend reading my finished degree project instead.

Below follow some of the areas I investigated during the internship and our technical test analysis.

Contract Testing

The first task started with the idea of researching what was needed for contract testing.

A developer had done research on the topic, and the test team had been talking about implementing it. I basically had to jump in and start researching, since contract testing is not something I had done before. I knew the concept and its basics but not much more. The developer had made a presentation of the work, which I looked at; it was directed towards provider-driven contract testing with a focus on the backend. I looked closer into consumer-driven contract testing instead, for three reasons. First, it seemed interesting, and I could see the benefits of testing from a user's perspective.

Second, the company's products are mobile applications on Android and iOS, and these are the priority since the website does not have an option to listen to or read the books. Third, the test lead had mentioned the problem of end-to-end tests with flaky results. With consumer-driven testing we can both monitor and prevent issues at a low level before they reach the clients. We test integration points in isolation to ensure that the services work together before deployment. I built a small Kotlin application to research this further, mostly for my degree project, to be able to analyze the coverage we can reach within microservices. I would say that this will probably take some time to implement in a complex system with containers, microservices etc. But the coverage and reliability would be worth it; it could save the time it takes to write fully integrated (end-to-end) tests and avoid the problems mentioned.

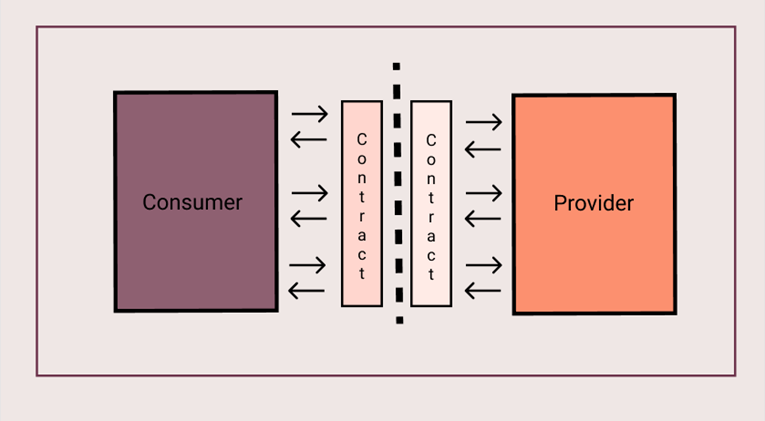

Here we can see the concept of contract testing:

The consumer tests its behavior against provider test doubles, and the test doubles are described in a contract. The requests sent are then replayed against the provider API, and the responses are verified against the consumer expectations written in the contract. The contracts should be shared among teams, so that each team knows the provider and consumer expectations; this is what makes collaboration and this type of testing possible.
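To make the two halves of this concept concrete, here is a minimal sketch in plain Python. It does not use a contract testing framework such as Pact, and it is not the Kotlin application I built. The service names, the `/books/42` endpoint and all function names are made up for illustration, and `real_provider` is only a stand-in for what would be an HTTP call to the real API.

```python
# Hypothetical contract shared between the consumer team and the provider team.
CONTRACT = {
    "consumer": "mobile-app",      # assumed name
    "provider": "book-service",    # assumed name
    "interactions": [
        {
            "description": "fetch a book by id",
            "request": {"method": "GET", "path": "/books/42"},
            "response": {"status": 200, "body": {"id": 42, "title": "Example"}},
        }
    ],
}


def stub_provider(contract):
    """Build a provider test double that answers exactly as the contract says."""
    table = {
        (i["request"]["method"], i["request"]["path"]): i["response"]
        for i in contract["interactions"]
    }

    def handle(method, path):
        return table.get((method, path), {"status": 404, "body": {}})

    return handle


def consumer_book_title(provider, book_id):
    """Consumer code under test: it only relies on the status and the title."""
    response = provider("GET", f"/books/{book_id}")
    if response["status"] != 200:
        return None
    return response["body"]["title"]


def real_provider(method, path):
    """Stand-in for the real provider API (an HTTP call in practice)."""
    if method == "GET" and path == "/books/42":
        return {"status": 200, "body": {"id": 42, "title": "Example", "author": "N.N."}}
    return {"status": 404, "body": {}}


def verify_provider(contract, provider):
    """Replay each contract request against the provider and collect mismatches."""
    failures = []
    for interaction in contract["interactions"]:
        request, expected = interaction["request"], interaction["response"]
        actual = provider(request["method"], request["path"])
        if actual["status"] != expected["status"]:
            failures.append(f"{interaction['description']}: status {actual['status']}")
            continue
        # Extra provider fields are fine; every field the consumer expects must match.
        for key, value in expected["body"].items():
            if actual["body"].get(key) != value:
                failures.append(f"{interaction['description']}: field '{key}' differs")
    return failures
```

On the consumer side, `consumer_book_title(stub_provider(CONTRACT), 42)` runs the consumer against the test double. On the provider side, `verify_provider(CONTRACT, real_provider)` returns an empty list as long as the provider still meets the consumer's expectations. Neither side needs the other one running, which is why these tests avoid the flakiness of full end-to-end tests.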

We had a meeting with the developer mentioned above, and during and after that meeting we ran into problems with continuing this project. Contract testing, both consumer- and provider-driven, requires communication between teams, access to code and planning of which areas are critical to include in the testing. We concluded that it would be too big a project for the time of my internship, though still something that would be useful and an improvement.

Synthetic monitoring

During the discussions mentioned in the previous section, synthetic monitoring came up as a topic, and we decided that I should investigate it. A tool called Blazemeter/Runscope had been set up with the thought that the teams would use it for API testing and synthetic monitoring. Almost no team was using it though, so I went on to figure out why and what was used instead. Here I came across different platforms: Postman, Blazemeter, GitHub, Slack, New Relic and GCP. Since the agile teams decide for themselves which tools to use, it was at times difficult to find the right person to ask about this, or to find the person who could give access to a tool or software.
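For readers new to the term: synthetic monitoring means running scripted requests against the system at regular intervals and raising an alert when a request fails or becomes too slow. The sketch below shows only the core of such a check in plain Python. It is not how Blazemeter/Runscope or any other tool mentioned here works internally. The probe functions, the check names and the 500 ms limit are assumptions for illustration; in a real setup each probe would call an actual endpoint and a scheduler would run the suite every few minutes.

```python
import time
from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str
    ok: bool
    latency_ms: float
    detail: str


def run_check(name, probe, max_latency_ms=500.0):
    """Run one scripted probe and judge it on status code and response time.

    `probe` is any callable returning an HTTP-like status code (hypothetical
    interface; a real probe would send a request to the service).
    """
    started = time.perf_counter()
    try:
        status = probe()
    except Exception as error:  # a crashing probe is itself a monitoring result
        latency = (time.perf_counter() - started) * 1000
        return CheckResult(name, False, latency, f"probe raised {error!r}")
    latency = (time.perf_counter() - started) * 1000
    if status != 200:
        return CheckResult(name, False, latency, f"unexpected status {status}")
    if latency > max_latency_ms:
        return CheckResult(name, False, latency, "too slow")
    return CheckResult(name, True, latency, "ok")


def run_suite(probes, max_latency_ms=500.0):
    """Run every probe once and return the results in the order given."""
    return [run_check(name, probe, max_latency_ms) for name, probe in probes.items()]


def failing(results):
    """Names of the checks that should trigger an alert."""
    return [result.name for result in results if not result.ok]
```

A suite could be declared as a dictionary of named probes, for example `{"login": check_login, "search": check_search}` with made-up probe functions, and the output of `failing(run_suite(...))` could then be posted to a channel the team already watches.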

Tool Sprawl

Here I analyzed why some specific tools were used by some teams, which different tools were in use and what limits they had. I went through lots of documentation about different tools and software, both the ones used and the ones that could be integrated. According to the answers I got, the reasons for the tool sprawl were that some tools couldn't do what the team needed, or that the team already used another tool when one of the others was introduced.

For example, GCP includes a lot of services for almost every area needed in cloud-based microservices/container development and testing. But after researching the PaaS more closely, I found no possibility to set up synthetic monitoring, neither within GCP nor with third-party software.

There are also limitations in their monitoring service when it comes to customized dashboards.

Monitoring, Tracing, Observing

The goal was to be able to monitor the system performance, both high- and low-level, in combination with smooth traceability. Today there are a lot of log files but no structure or documentation that the testers can use to work effectively with the data. With all these topics collected during the research, I started to look for a tool that included all of them, one that could be a way to minimize tool sprawl, eliminate flaky tests and give the test engineers a better overview of the various parts and of the full system. There was one tool that had already been used within the company; I say had, since it was no longer actively used: New Relic. I looked through their documentation and went through all their courses to get a deeper understanding. I did not have access to the tool, so all this was done while waiting to find the right person responsible. New Relic offers several ways to set up the integration between the tool and a main platform like GCP.

They have hands-on courses on their website that I went through, and I built a tutorial app to try out different features. Some of these were: building custom dashboards with NRQL (New Relic Query Language, their SQL-like query language), measuring performance, alerting on different critical conditions with Alert Quality Management, tracing alerts, and exploring data with full-stack observability.

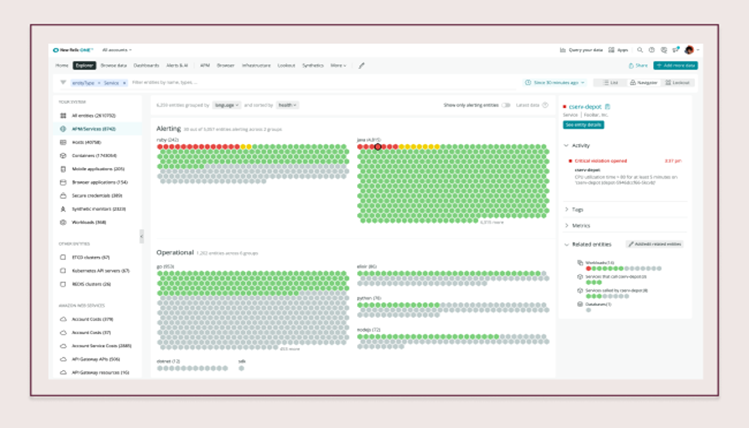

Here is an example from their website:

It is their navigator, where we can filter views by entity and see the full system health. The colors make it easier to find issues. Several other features in this tool make it interesting and useful when it comes to collecting data in one place.

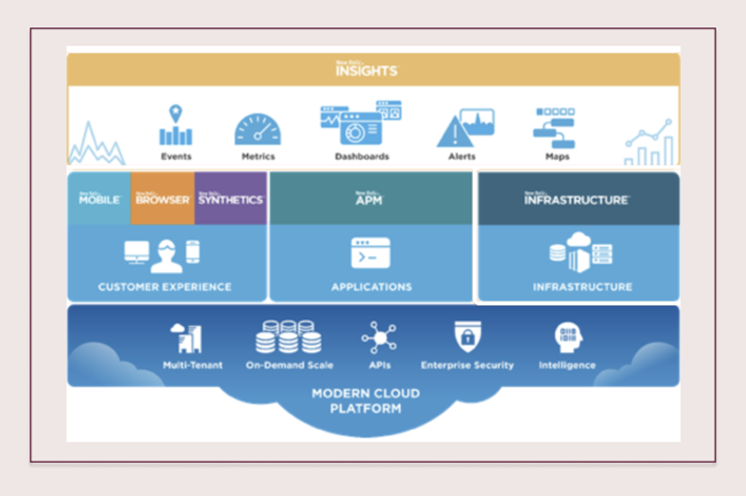

This diagram shows the infrastructure:

After going through the documentation, I investigated how the company had integrated New Relic with GCP. This led to the same problem as before: it was integrated through the code, which meant that I could not set up any of the monitoring dashboards that were part of the test lead's vision for the future testing structure. There would not be enough time to learn both how to integrate and how to set up the boards. We also had a discussion with the people responsible for it, and they had just started to organize a way to set up New Relic that could benefit the teams. So again, we concluded that the project idea was not possible. But I learned a lot from this, including monitoring techniques I was not familiar with before, and the structure of GCP and how to work with its features. I had taken some courses about GCP earlier out of my own interest in the topic, but here I was able to access it and research it more closely in a fully working setup. With my earlier experience of working with Azure, it was interesting to compare the differences and see the pros and cons of both. It also gave me a new area to research and learn more about. This is a topic I will continue studying.

Accessibility

I have specialized knowledge of and a large interest in digital accessibility, and the company has just started to educate its employees on this topic. They are working towards making their products accessible and compliant with EN 301 549, the European accessibility standard.

One main problem with WCAG and EN 301 549 is that many of the guidelines are directed towards web, not mobile. After all, WCAG 2.0 was published in 2008, when smartphones had barely reached the market. The test team talked about this in their meetings, and about how they sometimes don't know where to start and how to translate the success criteria to mobile. Therefore the test lead and I talked about it and decided that I would write a checklist, focused on mobile and kept minimal so that it would not become too overwhelming to use as a start.

The first thing I did here was a one-page checklist, where I made 12 categories:

- Color/Contrast

- Context changes / Dynamic Content

- Custom controls

- Form Fields and Labels

- Form Errors

- Images

- Keyboard and Touchscreen Navigation

- Links & Buttons

- Readability

- Semantics and Structure

- Tables

- Timing

Each category then lists just the basics of what it includes; it is kept simple to be easy to use.
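As an example of what a single check can look like in practice, the Color/Contrast category can be tested with the contrast ratio formula from WCAG. The sketch below is not taken from the checklist itself. It assumes the category maps to success criterion 1.4.3 (Contrast Minimum), which requires at least 4.5:1 for normal text and 3:1 for large text at level AA. The function names are my own, and the colors are assumed to be six-digit hex values.

```python
def _linear_channel(value):
    """Convert one 8-bit sRGB channel to its linear value, as defined by WCAG 2.x."""
    c = value / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color):
    """Relative luminance of a color given as '#RRGGBB' (or 'RRGGBB')."""
    hex_color = hex_color.lstrip("#")
    r, g, b = (int(hex_color[i:i + 2], 16) for i in (0, 2, 4))
    return (
        0.2126 * _linear_channel(r)
        + 0.7152 * _linear_channel(g)
        + 0.0722 * _linear_channel(b)
    )


def contrast_ratio(foreground, background):
    """WCAG contrast ratio between two colors, from 1:1 up to 21:1."""
    l1, l2 = relative_luminance(foreground), relative_luminance(background)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground, background, large_text=False):
    """Check the level AA thresholds: 4.5:1 for normal text, 3:1 for large text."""
    threshold = 3.0 if large_text else 4.5
    return contrast_ratio(foreground, background) >= threshold
```

Black text on a white background gives the maximum ratio of 21:1. A mid-gray such as `#777777` on white lands just below 4.5:1, so it fails for normal text but passes for large text, which is the kind of borderline case that is hard to judge by eye on a phone screen.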

The material available at the company was 100+ pages in PowerPoint format. So, my next step was to collect this material, connect it with the WCAG success criteria, connect those to the correct sections in EN 301 549, and add a section on how to test. Even for me, who has good knowledge of this topic and its details, it is a challenge to write. And something I had never done before is to see this from the perspective of testing, let alone mobile only. I learned a lot of new things writing this and got a deeper understanding of the testing.

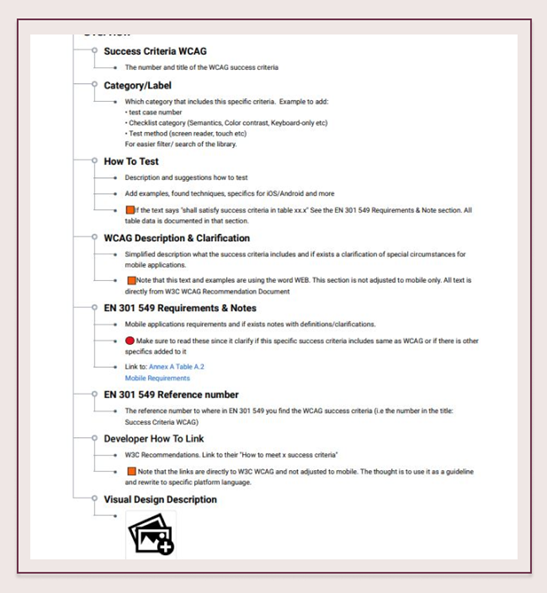

This project is still not completely done. I wish I could have finished it before the internship was over, but the time was too short. Most things are done: all success criteria and categories seen in the picture below are done, except that no images have been added. I have also been in contact with the UX designer, who is working on a similar project, and hopefully this is something they can use in the future and add images to for even more clarity. Here is a photo of the Overview document; I made this document so that it will be easier to understand where to find all the information in the main Accessibility Reference Library:

The documents were written in a program called Delibr. I had never used it before, but Storytel uses it, and to avoid introducing a new tool I decided to go with it. Also, since it is not possible to create foldable sections in Google Docs, this was the best option to keep the large document usable. I am working on a similar reference library for this site and will post it when I have enough time for it.

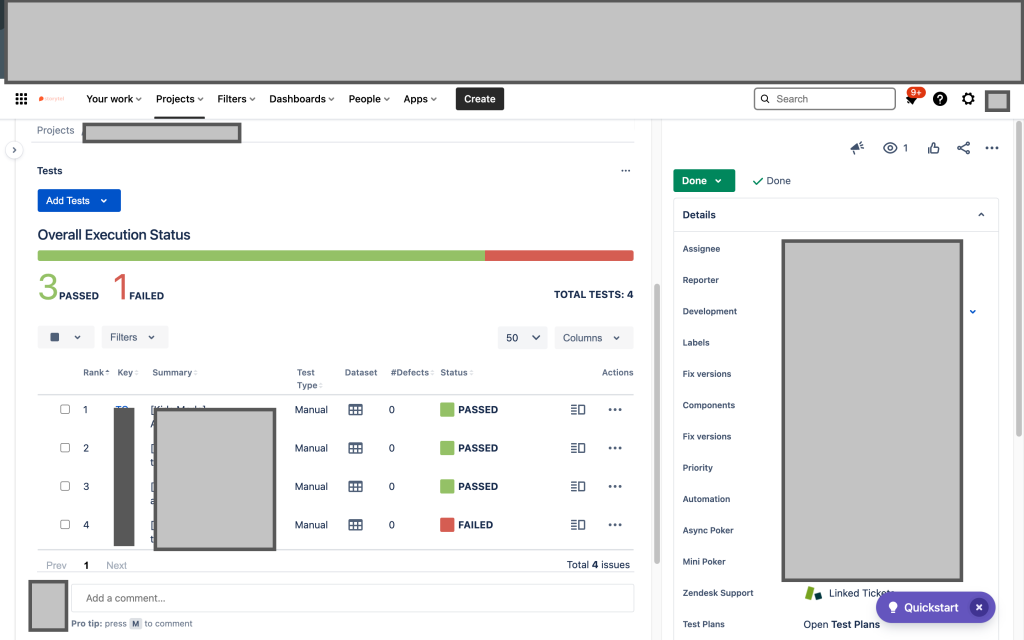

App release

To get started with the app testing, I was assigned some test cases to work on. This is done in Jira; I knew a bit about Jira from before but had never worked in it on real projects. So, even if I felt a bit lost at first, or maybe mostly nervous about doing something wrong that could cause problems, this was interesting and informative. I tested both the Android app and the iOS app on iPad. The whole process was a new experience: setting up TestFlight for iOS and internal testing for Android. I have done app testing on Android before, both on others' apps and my own, but that was with the device connected to the computer, not through internal testing. I got to see the full process of testing:

- From the test board in Jira, you have the option to see only the test cases assigned to you.

- Start the execution and test step by step.

- Some test cases included testing different features with SQL in the database.

- If an issue is found: first read the expected results to see if it is already known but not a priority for now.

- Look through the reported issues and either connect the bug to an already existing issue or create a new bug report.

I followed it all in different tools: Jira, GCP, BigQuery, email and Slack. During this time, I had impressive help from some of the test engineers; they took their time to help when needed and answered questions along the way. Their work-from-anywhere approach, which means everyone is working remotely (at least now in times of covid), does not impact their communication. People are friendly, helpful and structured in their work. This experience is something I value highly; it shows that people are professional and care about their work and their colleagues. Some of the issues I found (which were not that many, I must say) were then assigned to developers to solve. One of those issues was still ongoing when my last day was coming up. I had been following the process and the discussion around it, and it felt a bit like not reading the end of a book: you want to read the rest. It is interesting to see the process happening and how testers and developers, and developers in different teams, communicate and work together on it.

The full internship report can be read here: Link to InternshipReport