The goal with this project was to transfer an old database and include the data in a new existing one.

The company have a product that is used as a platform for online courses. This

product has been rebuilt a few years back but one of the class categories: Electricity educations has never been moved over to the new platform with the new database.

Now they are in a new phase of improving workflow and wanted to get this done.

The goal for my part of this was of course to learn more coding and to work with a bigger project. Another part of my internship was to learn more how to structure work and estimate workflow and time. This was done by using Azure and our daily standup meetings.

Project Planning

The first thing we did was to have a meeting with the management team of the

company. They went thru the requirements for the finished product. This included to get all data from the old database that was important to keep. This included clients, history of purchases, all user information and more. The new database doesn´t included as much data as the old one so it was a bit unclear how much and which data that was needed. We concluded that the first step would be to transfer and make access to the same table columns that are in the new one.

Since the new program they have also has a well worked API, we decided to make an API-client and transfer everything over that.

We also set up requirements for the entire project and what I was needed to do during these months. This included sprint planning and to work on time estimating, we highlighted the importance of follow scrum and be included in the daily stand-up, to get that time to report if there was any specific problems during the process. But also to have separate meetings to follow up where more support and code reviews was needed.

Most of the software and programs used: Visual studio, VS Code, SSMS, GitHub, Azure, .NET.

The focus now was to install the different databases, some new NuGet-packages and get started.

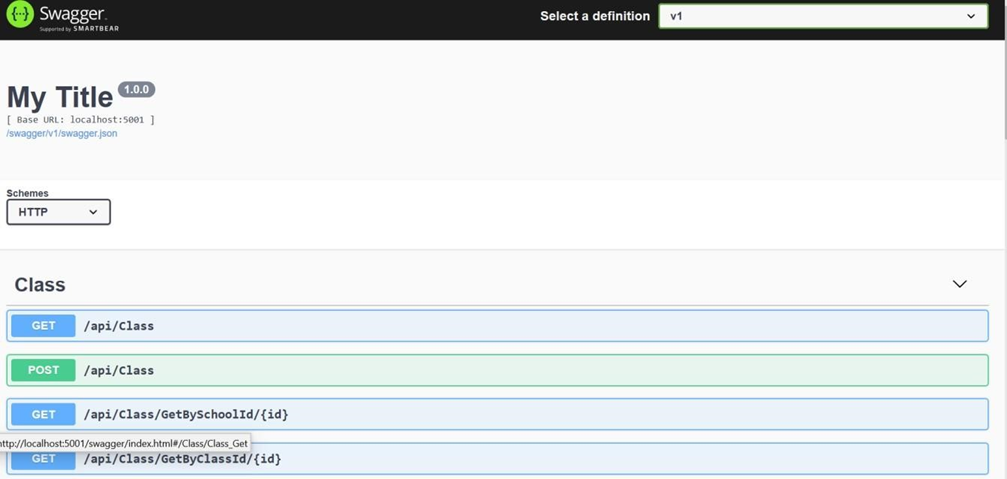

Swagger

The first thing was to figure out the parameters for the API-calls. Here I used

Swagger since it was included in the API-client. Swagger UI automatically generates a visual documentation of all API resources available.

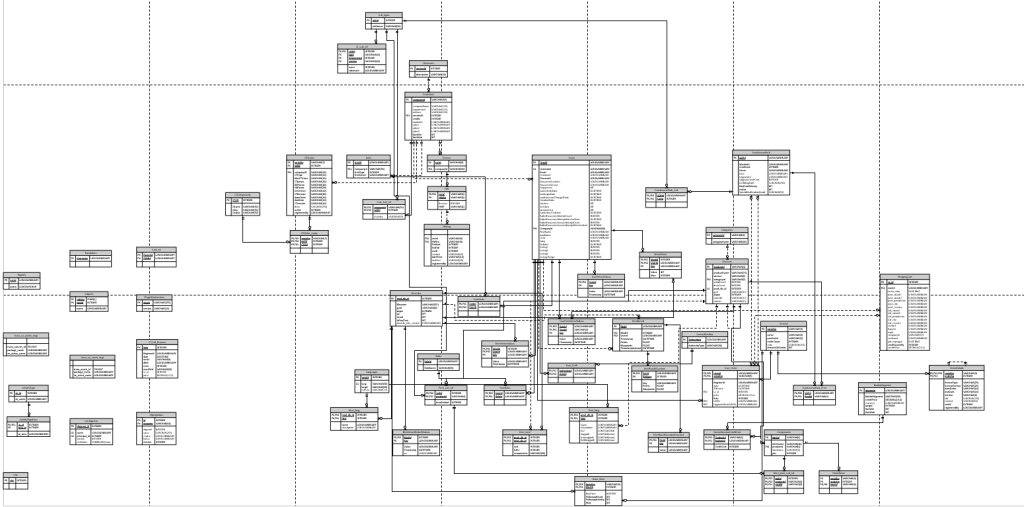

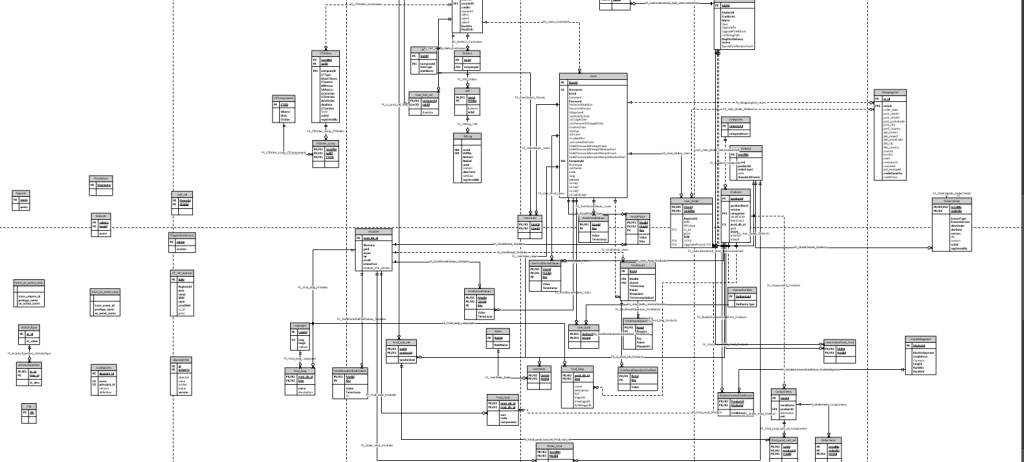

From here I had to sort out which tables was connected and needed to be involved in the project. The end-product for this is quite large and involves lots of various categories and areas, for me who wasn´t familiar with the actual product this was a bit of work before finding a good overview of everything.

There were times where I got stuck and couldn´t get any clear information from the other developers. Some don´t know the old database at all, and some was too busy to take time looking at it. I got the information that not everything was needed and that there was another database which held the privacy parts of the data. No information about this was said during the meeting and planning so I did not know this at first and therefore some things felt much more complex and nested than it had to be. The result I put into a Visio data table to have as a reference when looking thru the old database to find the similar tables.

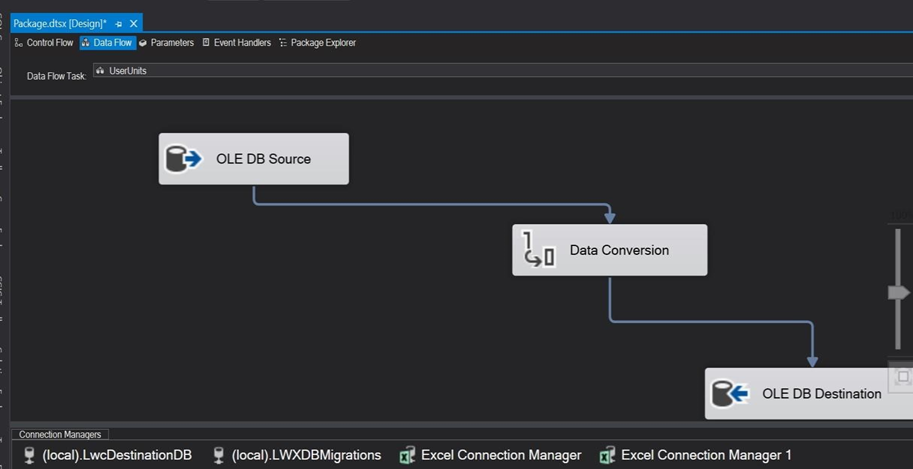

SSIS, SQL Server Integration Service

The old database is different from the new one, as I mentioned above it includes more data, also have a different many to many relationship structures. To make it a bit easier to maintain the data my supervisor gave me the advice to try out SSIS, to use that tool to move needed data to a temporary database and from there use in the project. The first thing was to install the NuGet package in Visual Studio and then I had to read upon how to use it. SSIS is not something we have used before, and it was interesting to learn a new feature and way to work.

SSIS stands for SQL Server Integration Service and is exactly that. A component of SQL server that can execute data migration tasks. There are more features and useful tools included but for this project it was that part I needed. The tables I needed was moved from the old database into a temporary one. This made it faster to work with, not only the overview, but also to restore the data. When debugging you change the database over and over, to save time it should be easy to reset the database after it has been changed.

Code to connect

For this project I could use whatever framework I wanted. There were no other rules more than what mentioned above for how to structure the code. The other projects the company has are mostly written in C#, .NET Framework or React. This is also what we have used in the education. I decided to use .NET core, for a couple of various reasons: Familiarity, easy to work with and that this gave me the opportunity to raise my knowledge further. In school we mostly learned the basics of .NET core, here it was more complex code that had to be used. Many features I wasn´t familiar with at start. The project could be viewed in a couple of different steps, from the analysis of API requirements, from there pick the tables needed to get the key features. Then to get these from old database, to build the POST requests, and then finally connect it all.

The project was built as a Console Application. This made it easy to write results directly in the console while debugging.

Old Database

The first step to complete in code was to get the data from the old database. Here I used what I would say is what we have used the most during the education. Simply basic C# and call the table data thru a connection string. Here I didn´t have to use any advanced code, joins or many to many relationships. It was the part of the project that took least time to build.

New Database

To be able to transfer over the data thru the API we need to make POST requests. These requests need to include the right Json, data and structure. The end parameters were the ones I got thru Swagger, then I took the same classes as the new project had, this to make sure the Json followed the same structure. At first, I debugged the POST requests with fake data. This is easier, then we know that all parameters are correct, and data can be posted and connected to the right table in the new database. This is something I felt that we hadn´t got enough information about in our education. I looked thru our old school projects and could only find one project we made who included an API consumer, and that was not written asynchronous. We have been developing APIs and how to build the server side, but most has been tested thru Postman. Which means that the request code itself doesn´t need to be built. For this first step of building with mock data it felt ok, even if I started to feel stressed over the actual time it took.

POST the data to the new database

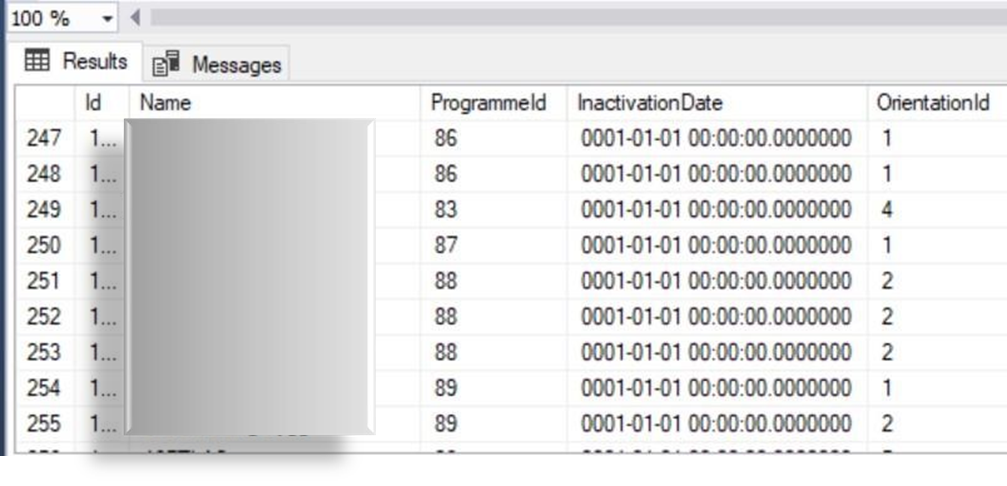

When the GET and POST requests was done and confirmed to be working it was time to connect the databases and do the transfers. All code here was written asynchronous. This means that task is being awaited to finish before next step. This way of coding is pretty much needed while working with this large amount of data. Here I also had to include many to many and many to one relationship to be able to connect the different tables with their right Ids.

Like this table above, it doesn´t look complicated and from the new database perspective it isn´t. Here ProgrammeId relates to Programme Table, from there it is connected to the Customer, and from CustomerId it goes down to the CustomerNumber. That part is easy to follow and connect with .NET core code. What was more of a challenge was to find these entities in the old database. There is no code from the old project to look at, if I had that it would probably been easier to find where in the database they existed. Few tables have the same names or structure, and in this case, I have already migrated the customer and the programme but not the class names. This means that the code had to be connected back and fort between the databases to be able to be approved in the API POST request.

There was many of similar things coming up while building the connections. This is a level above my knowledge, and it was many things to learn along the way. The said follow up meetings was something I was waiting for to get those time consuming, question marks explained a bit. To study this closer and to kind of struggle sometimes was there and then mind wrecking but the outcome of doing it and learn is another step in improving understanding.

For the C# code and API-requests the help I got from the other developers got me to learn some useful things. With the education we got in school the knowledge is there. But here it was clear where the topics I needed to study more was. It is such inspiration to get the ability to learn new things from people with many years’ knowledge. Their perspective and inputs gave new ways to think and ability to become more effective in writing the code.

To go thru this database taught me lots about how to write and think around SQL in a deeper level. Here I spent some of the time going thru an online course to learn more than just the basics. It is always useful to know and update the past knowledge. For this company they use SQL databases for both their products which means it is something one comes across while developing in their systems. Therefor it felt good to invest time in the subject for the future.