Scenario

Given scenario

We have just been hired by this company to join the developer team. The product is developed by the company, and they are immensely proud of their creation, especially the lead developer: the product was his idea, and he is now our boss.

We have been told that they follow Scrum, but there is no Scrum Master at the moment. The team has created and prioritized the backlog, but it lacks clear documentation of criteria and design ideas.

The developers have run plenty of unit tests and are sure there are no bugs. They are less sure about the user experience, and they are concerned that their competitors have a better and more user-friendly product. (Side note: they have not done any research into this concern, though.)

The purpose of the product is to give users the impression that it is easy to use and that they know how to use it by instinct. This will make the product attractive to users, and they will buy it.

To create this user-friendly experience, they want to make the site accessible. The idea is to include all accessibility groups, but for now the priority is visual and keyboard-only accessibility.

From the given scenario above we got the assignment to do the following:

- Three bullet-point questions: to whom and why

- Describe your analysis of the project environment, what will affect the testing based on the scenario

- Describe your analysis of the product. It should be based upon the real product and the prerequisites from the scenario

- Analysis of the quality criteria. It should be based upon the real product and the prerequisites from the scenario

- Your conclusion. How should we test the product

- Write three test cases. Document oracle and perspective of what is needed to be evaluated, how the conclusion ended up with this choice.

Questions

Three bullet-point questions: to whom and why.

To the developers:

- What are the accessibility and/or usability criteria (or matrix) for testing the product? Do we have a copy of the document?

- Has any accessibility testing been done, automated or manual? If so, what was tested, and when: at the design stage, during implementation, or after development?

- We ask this to learn what the developers know about accessibility and how to implement it, and whether anything has been evaluated specifically for accessibility (not just usability).

To the management, product owner:

- How trained is the entire team in accessibility? (Management, designers, developers)

- When in the development process is the design, usability, accessibility included?

- Here we want to see whether accessibility is a priority from the start (planning, budget), during development, or only at the end, right before a product or feature release.

- Do we have documented data from users? Their experience, logged usage, error events.

- How exactly may accessibility feedback and problems be reported?

- Here we want to see whether users experience anything specific as a barrier, something that makes them unable to use the product or makes it difficult to use.

Project environment analysis

What will affect the testing? Knowledge, implementation, documentation, workflow.

Knowledge and Workflow

Management:

- How good is the overall knowledge of the product?

- Measure the level of accessibility knowledge in management.

- Is usability and/or accessibility included in the planning process?

- Is it included in the product budget?

Based on the product owner's answers, the team, across the whole work pipeline, has had no specific training to build an understanding of accessibility. Most people have little knowledge of usability and even less of accessibility. It has not been and is not a priority, and it is given no attention in the planning process. The company knows about accessibility, but only as far as adjusting designs for color blindness and common media player features.

Developer team:

- The overall knowledge of the product and its features

- Workflow within the team, roles, tasks

- Knowledge and acknowledgement about accessibility

- Backlog setup

The team created the product and came up with the idea for it. They have worked closely together on the product, and from the scenario we can see that they are proud of the result. There doesn't seem to be any specific structure in the Scrum process. Accessibility knowledge is slightly higher in this team: they are aware of the topic and know to pay attention to basic features, although this is limited to visible features.

Documentation

Management:

- Check for documents from customer service, to analyze if there is feedback, critique from users.

- Requirements specification.

- Design documentation, flowcharts, wireframes.

The documentation is unclear, and management does not have any clear requirements documentation. There are only a few wireframes, and no flowcharts or other functional specifications.

There has not been any in-person interaction with users for feedback.

Developers:

- Event logs from the latest unit tests

- Current change logs of source code

The team has good knowledge of the product and its features, but nothing has been documented. There are unit tests for most functions.

Product Analysis

Analysis based on given scenario and the product itself

- What is the product's main function?

  - Non-premium user options

    - Discover

    - Like and follow podcasts

    - Search

    - Stream and download non-premium podcasts

    - Includes advertisements

  - Premium user options

    - Discover

    - Like and follow podcasts

    - Stream and download all podcasts

    - Ad-free

    - Access to comedy albums

    - Monthly giveaways

Data from Stitcher's Podcasting Report 2020:

Favorited shows will automatically download new episodes.

350,000 podcasts have been published since 2010. Reported or scripted shows tend to be shorter than chat-based shows.

Binge listening was up to 34 million hours in 2019.

Read the report here: Link to Stitcher podcasting report

- Check the following categories for accessibility levels:

  - Automated testing

  - Content changes

  - Custom controls

  - Form errors

  - Images

  - Keyboard and touchscreen navigation

  - Readability

  - Semantics and structure

  - Tables

  - Timing

Quality criteria analysis

Analysis based upon given scenario and the real product. Criteria for WCAG A & AA in visual and keyboard-only.

- What are the current requirements?

- Check whether the product follows the four main web accessibility principles:

  - Perceivable

  - Operable

  - Understandable

  - Robust
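One measurable WCAG AA criterion under the Perceivable principle is text contrast: normal text needs a contrast ratio of at least 4.5:1 against its background, and large text at least 3:1. The sketch below computes that ratio with the WCAG relative luminance formula. The function names are my own, and the colors in the usage lines are arbitrary examples, not values taken from the product.

```python
def _linearize(channel_8bit):
    """Convert one sRGB channel (0-255) to linear light, as in the WCAG definition."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color):
    """Relative luminance of a color written as '#rrggbb'."""
    hex_color = hex_color.lstrip("#")
    r, g, b = (int(hex_color[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground, background):
    """WCAG contrast ratio: (lighter + 0.05) / (darker + 0.05)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground, background, large_text=False):
    """True if the color pair meets the WCAG AA minimum contrast."""
    threshold = 3.0 if large_text else 4.5
    return contrast_ratio(foreground, background) >= threshold


if __name__ == "__main__":
    # Arbitrary example colors (assumption: not measured on the real site).
    for fg in ("#767676", "#777777"):
        print(fg, round(contrast_ratio(fg, "#ffffff"), 2), passes_aa(fg, "#ffffff"))
```

A check like this only covers one criterion; automated tools such as Lighthouse and axe run the same kind of calculation on rendered pages.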

Test Design

My conclusion from the analysis and my choice of test techniques, with a detailed description of all selected techniques.

Conclusion

Project environment

Management: Management and the business have not included accessibility in the budget from the start, but since there is a worry that competitors' products have better usability, there is an openness to change. It is important that the entire management has knowledge of, and gets training in, accessibility. This will create an understanding of the changes that need to be made. We should also show the benefits of making the product accessible by including data on better overall user experience and on risk management: with existing and upcoming laws, a product that does not follow the standards can lead to lawsuits. Accessibility work needs to be maintained just like all other features and code in a product, but it will take less time and effort after a while. It is worth including and highlighting in the budget for design, development, and test.

Developer team: The team is close-knit and used to working on what they want. Without a Scrum Master, there is no defined structure beyond following the backlog. Pride in the product is high, so the team may not want changes in the workflow and may feel that guidelines are unnecessary. This can create reluctance when we point out changes needed for better accessibility. We should identify which categories can be covered by automated testing and which need manual testing, and give the developers a guideline for including this in their backlog and work. Communication with the developers will be important: to avoid a hostile work environment, we must make clear that we are not criticizing their work or the product itself.

Product

Version: This analysis is for the Web application.

Function: Service to listen to podcasts, Free and premium versions. Podcast by selected categories.

Main categories:

- Front Page, features added and recommended shows

- Search, search and browse podcasts and shows

- My podcast tab, access favorites, account settings, preferences for download, playback, location.

- Premium tab, for premium subscribers, access to exclusive content

First analysis of the web application, done by running the Lighthouse Chrome extension:

- No automated testing

- Tab focus is not highlighted, and tabbing skips some functions

- Custom controls and widgets are missing names and roles

- Form errors are detected and associated with their form elements

- Images and links are missing alternative texts

- Navigation focus is missing, so the site cannot be used with keyboard only

- Reading order is logical but not separated into individual functions

- Structure, information, and relationships cannot be programmatically determined.

- No grid layouts found
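Findings such as missing alternative texts on images and links can be re-checked quickly while fixes are made. The sketch below is a minimal static check built on Python's standard `html.parser`. It assumes the page HTML is already available as a string, and the class name, function name, and issue labels are hypothetical. It does not replace Lighthouse or axe, which evaluate the rendered page.

```python
from html.parser import HTMLParser


class MissingTextAlternativeFinder(HTMLParser):
    """Collects <img> tags without alt and <a> tags without any accessible text."""

    def __init__(self):
        super().__init__()
        self.issues = []        # list of (issue label, src or href)
        self._open_link = None  # state for the <a> element currently being parsed

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "img":
            if "alt" not in attrs:
                self.issues.append(("img-missing-alt", attrs.get("src", "")))
            elif attrs["alt"] and self._open_link is not None:
                # A non-empty alt text gives the surrounding link a name.
                self._open_link["named"] = True
        elif tag == "a":
            self._open_link = {
                "href": attrs.get("href", ""),
                "named": bool(attrs.get("aria-label")),
            }

    def handle_data(self, data):
        if self._open_link is not None and data.strip():
            self._open_link["named"] = True

    def handle_endtag(self, tag):
        if tag == "a" and self._open_link is not None:
            if not self._open_link["named"]:
                self.issues.append(("link-missing-name", self._open_link["href"]))
            self._open_link = None


def find_missing_text_alternatives(html):
    finder = MissingTextAlternativeFinder()
    finder.feed(html)
    return finder.issues


if __name__ == "__main__":
    # Made-up markup for illustration, not copied from the product.
    sample = '<a href="/show/1"><img src="cover1.png"></a><img src="logo.png" alt="">'
    print(find_missing_text_alternatives(sample))
```

An empty `alt=""` is accepted here because it is the standard way to mark an image as decorative.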

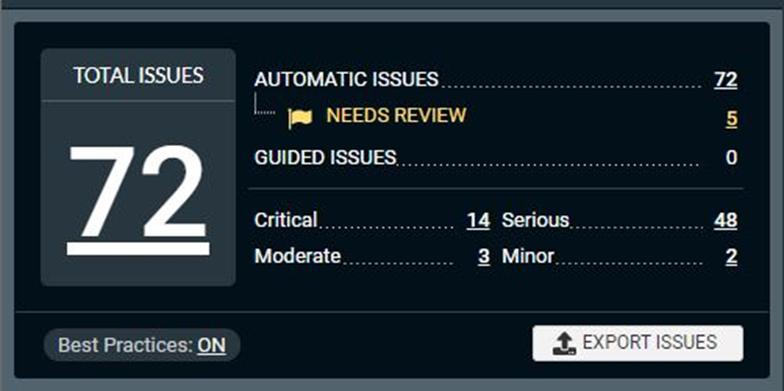

The result of running a full-site check with the automation tool axe DevTools:

Overall, no function fully works for users with visual impairments or for keyboard-only users.

Quality Criteria

Current requirements are:

- Usability: Easy to understand, to be operated, and to access.

- Charisma: Appealing to make the customers subscribe to premium.

- Capability: The product should function as intended for all users.

Here the four main web accessibility principles were used to see whether the product follows the quality criteria.

The missing parts of the various categories are mentioned in the Product Analysis section.

Perceivable: A user cannot access content if they don't know what is on the page. With alternative texts and similar information missing, a screen reader cannot inform the user, and the content cannot be converted to Braille.

Operable: Navigation and dynamic or interactive components do not work on all devices, and keyboard-only users will not be able to navigate the product. Search, dropdown menus, media player functions, and link selection are all unavailable.

Understandable: The product is understandable and consistent across the site. Instructions, hints, and form field constraints are available.

Robust: Missing markup and missing user interface component information (such as name, role, and value) cause problems for screen readers. Standard markup language should be used.

Test Techniques

Tests should be written during the planning phase and follow the accessibility requirements, but to add testing to the product in its current state we need three categories:

- Automated tests – comprehensive automated scans of all digital assets

- Manual tests – representative expert manual assessments against the appropriate digital standard.

- Usability tests for accessibility methodology – hands-on usability testing by real users with disabilities for critical paths/core functions.

1- Automated testing:

- Unit testing: Test in the browser during development. Run the axe DevTools browser extension to find issues directly while developing the unit tests. Integrate axe DevTools Enterprise into the CI pipeline to run automatically after every commit.

- Integration testing: Integrate axe DevTools Enterprise into the CI pipeline to run automatically after every commit. Run after the unit tests are confirmed to be working.

- Automated regression testing. Select the appropriate minimum set of tests needed.

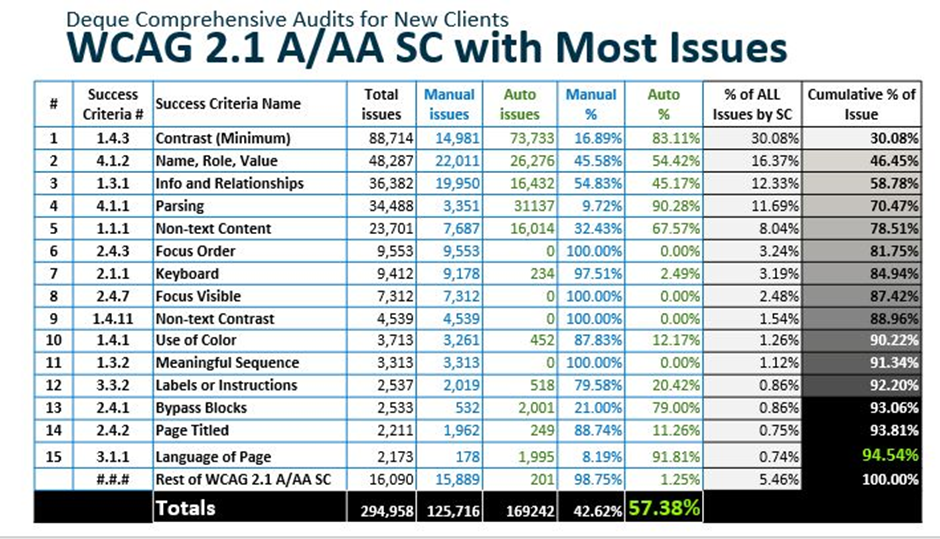

My choice of testing tool is axe. Axe-core is an open-source JavaScript library that tests HTML-based UIs. Its rules are based on WCAG 2.0 and 2.1 at levels A and AA. Unlike most other accessibility testing tools, it also includes additional rules covering the most common accessibility practices for different HTML tags.

Deque, the company that built axe, offers several tools based on the axe-core library that we can use to evaluate accessibility. There are axe DevTools Free and Pro; the free version is a browser extension that works with Chrome, Edge, and Firefox. We can also use axe DevTools Pro/Enterprise to write automated tests and unit tests. It supports several integrations, such as Selenium WebDriverJS. Axe runs a series of tests to check the accessibility of both content and functionality on a website, although it only supports open shadow DOM. The extension covers many issues and is a good, fast tool. If we look at this newly released report, we can see that automation can do a lot of the work for us here:

According to this report automated testing identifies 57 percent of digital accessibility issues. Read the full report here: Link to The Automated Accessibility Coverage Report
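To make the CI step concrete, the sketch below shows one possible gate on an axe-core result. It assumes the scan result has already been exported as JSON and loaded into a Python dict; axe-core reports a `violations` list whose entries carry `id`, `impact`, `tags`, and `nodes`. Blocking only on WCAG A/AA rules with `serious` or `critical` impact is my own assumed policy, and the sample data is made up rather than taken from a real scan of the product.

```python
# Tags axe-core uses for WCAG 2.0 / 2.1 level A and AA rules.
WCAG_A_AA_TAGS = {"wcag2a", "wcag2aa", "wcag21a", "wcag21aa"}

# Assumed team policy: only these impact levels fail the build.
BLOCKING_IMPACTS = {"serious", "critical"}


def blocking_violations(axe_results):
    """Return a summary of the violations that should fail the CI run."""
    blocking = []
    for violation in axe_results.get("violations", []):
        if not WCAG_A_AA_TAGS.intersection(violation.get("tags", [])):
            continue  # best-practice rules are reported but do not block
        if violation.get("impact") in BLOCKING_IMPACTS:
            blocking.append({
                "id": violation["id"],
                "impact": violation["impact"],
                "nodes": len(violation.get("nodes", [])),
            })
    return blocking


# Made-up, heavily shortened result for illustration only.
SAMPLE_RESULTS = {
    "violations": [
        {"id": "image-alt", "impact": "critical",
         "tags": ["cat.text-alternatives", "wcag2a", "wcag111"],
         "nodes": [{"target": ["img.cover"]}, {"target": ["img.logo"]}]},
        {"id": "color-contrast", "impact": "serious",
         "tags": ["cat.color", "wcag2aa", "wcag143"],
         "nodes": [{"target": ["p.tagline"]}]},
        {"id": "region", "impact": "moderate",
         "tags": ["cat.keyboard", "best-practice"],
         "nodes": [{"target": ["div.promo"]}]},
    ]
}

if __name__ == "__main__":
    failures = blocking_violations(SAMPLE_RESULTS)
    for failure in failures:
        print(failure)
    # A CI job would exit non-zero here so the commit is flagged.
    raise SystemExit(1 if failures else 0)
```

Keeping best-practice findings out of the gate lets the team start with the WCAG A and AA scope from the scenario and tighten the policy later.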

2- Manual testing: Go through the issues reported by the automated tests manually to identify problem areas. Also do manual testing independently of the automated tests. Follow the recommended accessibility routine when writing test cases:

Use Function testing, Input: Keyboard, Input: Touch, Visual Presentation, Alternative Text, Audio and Video, Semantics and Document Structure, Forms, Dynamic Content, Custom Widgets.

3- Usability testing: Moderated usability testing, run as live virtual sessions. Collect data manually by taking notes and observing the test subjects, and end with a general discussion where the test subjects share their thoughts. Include:

- Blind and low vision: a screen reader user and a screen magnifier user.

- Tactile/motor: a user who uses keyboard only (or assistive technology serving the same purpose as a keyboard) and a user with tremors.

Test Cases

3 test cases of the product. Document oracle and perspective of what is needed to be evaluated, how the conclusion ended up with this choice.

Test Suites

The main categories from the product analysis above are used as test suites to categorize the test cases:

- Front Page, features added and recommended shows

- Search, search and browse podcasts and shows

- Login

- My podcast tab, access favorites, account settings, preferences for download, playback, location.

- Premium tab, for premium subscribers, access to exclusive content

- Regression Suite (Set of test scenarios from each level of testing).

Task based usability test cases

- Register as a new user

- Log in

- Browse podcast from discover dropdown menu option

- Select one podcast

- Play selected podcast

The goal is to determine whether the main functions can be used successfully by people with vision and tactile (motor) disabilities.

The test subjects will be observed while performing simple main tasks. Verbally guide users through the tasks, let them think out loud, and ask about their thoughts and decisions during the process. Do not ask leading questions, and take in-depth notes.

Assistive technology used: NVDA screen reader, screen magnifier for vision. Keyboard-only for tactile.

Operating system: Windows, Mac

Browser: Chrome for Windows, Safari for Mac

NVDA was selected because it is one of the most common screen readers and includes the Speech Viewer tool, which lets us view the screen reader output and identify errors.

- Observe known click stream for each test case.

- If the user didn't follow the known path, what did they click on instead?

- Were any accessibility barriers or other issues discovered?

- Rate difficulties on a scale to document priority level.
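The observation notes can be kept in a small structured record so that the difficulty rating turns directly into a priority order. The sketch below is one way to do that. The four-point scale, the field names, and the example records are all my own assumptions for illustration; they are not results from a real session.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    task: str
    assistive_technology: str
    followed_known_path: bool
    barrier: str
    # Assumed scale: 1 = minor friction, 2 = slowed down,
    # 3 = needed help, 4 = task could not be completed.
    difficulty: int


def prioritize(observations):
    """Most difficult first; at equal difficulty, off-path sessions come first."""
    return sorted(observations, key=lambda o: (-o.difficulty, o.followed_known_path))


if __name__ == "__main__":
    # Made-up example records, not observations from a real test session.
    notes = [
        Observation("Log in", "keyboard only", True, "focus indicator hard to see", 2),
        Observation("Register as a new user", "NVDA", False, "form fields announced without labels", 4),
        Observation("Search", "screen magnifier", False, "result list hard to follow when zoomed", 2),
    ]
    for note in prioritize(notes):
        print(note.difficulty, note.task, "-", note.barrier)
```

Sorting is stable, so records with the same rating keep the order in which they were noted.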

Example, Register new user, screen reader:

Activate the NVDA screen reader. Browser: Chrome on Windows, since NVDA is available for Windows only.

Observe click stream:

- Register link in main menu

- Create account button

- Complete form field

- Sign up button

- Logged in page loads

Example: Search, keyboard only.

Enable system-wide keyboard accessibility.

Enable Safari keyboard accessibility

- Place cursor into address bar

- Keyboard only, tab navigate to find search bar

- Tab one step further

- Navigate back to search bar

- Type search for a podcast

- Tab down and highlight one podcast in the list

- Tab to select this podcast

- Selected podcast's page should load

Example: Login Page, keyboard only.

Enable system-wide keyboard accessibility.

Enable Safari keyboard accessibility

- Tab to login/register menu option

- Press Enter to select

- Modal window opens

- Tab to click on login

- Verify that cursor by default lands on username text box

- Type in username

- Tab to next form field

- Verify that cursor lands in password section

- Check if password is hidden while typing

- Verify that navigation works by tabbing back and forth between form fields (Tab and Shift+Tab)

- Tab so the login button shows up as selected

- Press login button

- Logged in front page should load
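The expected focus sequence in the login steps above (username, password, login button) can be written down before the manual run. The sketch below derives an approximate tab order from static HTML with the standard `html.parser`, following the usual rule that elements with a positive `tabindex` come first in ascending order, followed by naturally focusable elements in document order. The markup and ids are hypothetical, and the parser ignores CSS visibility and scripting, so a real browser session remains the authority.

```python
from html.parser import HTMLParser

NATIVELY_FOCUSABLE = {"button", "input", "select", "textarea"}


class TabStopCollector(HTMLParser):
    """Collects (tabindex, document position, label) for each keyboard tab stop."""

    def __init__(self):
        super().__init__()
        self.stops = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if "disabled" in attrs or attrs.get("type") == "hidden":
            return
        focusable = tag in NATIVELY_FOCUSABLE or (tag == "a" and "href" in attrs)
        if attrs.get("tabindex") is not None:
            # Simplification: assumes tabindex holds a valid integer.
            tabindex = int(attrs["tabindex"])
        elif focusable:
            tabindex = 0
        else:
            return
        if tabindex < 0:
            return  # focusable by script only, skipped by the Tab key
        label = attrs.get("id") or attrs.get("name") or tag
        self.stops.append((tabindex, len(self.stops), label))


def expected_tab_order(html):
    collector = TabStopCollector()
    collector.feed(html)
    positive = sorted(stop for stop in collector.stops if stop[0] > 0)
    natural = [stop for stop in collector.stops if stop[0] == 0]
    return [label for _, _, label in positive + natural]


if __name__ == "__main__":
    # Hypothetical login modal markup, not copied from the product.
    login_modal = """
    <div role="dialog" aria-modal="true" aria-labelledby="login-title">
      <h2 id="login-title">Log in</h2>
      <input id="username" type="text">
      <input id="password" type="password">
      <input type="hidden" name="token">
      <button id="login-button">Log in</button>
    </div>
    """
    print(expected_tab_order(login_modal))
```

Comparing this list with what the tester actually experiences makes a skipped or out-of-order field easy to report.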

Test cases and techniques were selected based on documents (the oracle) from Deque and the W3C.

Link to W3 website on the topic

Link to Deque University’s Course

The three test cases cover core functions that a user needs in order to navigate and use the product. The user must be able to tab through and select options with keyboard only, both on the main page and in the modal window that opens for login/register.

Screen readers need to be able to notify the user that a modal window has opened and that the view has changed.
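For a screen reader to announce the login/register modal, the markup normally needs a dialog role, `aria-modal="true"`, and an accessible name through `aria-label` or `aria-labelledby`. The sketch below checks static HTML for those three things. The function name and the wording of the messages are my own, and the check assumes the modal is a custom element rather than a native `<dialog>`; listening with NVDA is still needed to confirm what is actually announced.

```python
from html.parser import HTMLParser

DIALOG_ROLES = ("dialog", "alertdialog")


class DialogCollector(HTMLParser):
    """Records the problems found on each element that has a dialog role."""

    def __init__(self):
        super().__init__()
        self.dialogs = []  # one list of problem strings per dialog element

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if attrs.get("role") not in DIALOG_ROLES:
            return
        problems = []
        if not (attrs.get("aria-label") or attrs.get("aria-labelledby")):
            problems.append("dialog has no accessible name")
        if attrs.get("aria-modal") != "true":
            problems.append("aria-modal is not set to true")
        self.dialogs.append(problems)


def audit_modal(html):
    """Return a flat list of problems; an empty list means the basics are present."""
    collector = DialogCollector()
    collector.feed(html)
    if not collector.dialogs:
        return ["no element with a dialog role found"]
    return [problem for problems in collector.dialogs for problem in problems]


if __name__ == "__main__":
    # Hypothetical markup: a modal built from a plain <div>.
    print(audit_modal('<div class="modal"><input id="username"></div>'))
```

An empty result only shows that the attributes exist, not that focus moves into the modal when it opens.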