Part 2 of 2

This text covers different areas of non-functional testing, using a specific video service product as the focus object. I won't go into detail about the research done on this service, but I have gone through its documentation, including developer backlogs, technical manuals and policies, to gain a better understanding of the product. You can read more about the video service here: Link to video service

Part 1 can be found here: Link to part 1

This second part includes the following requirements:

- Create at least one test case for each area of non-functional testing:

  - Load Test

  - Stress Test

  - Usability Test

  - Accessibility Test

  - Security Test

  - Penetration Test

- All test cases should be as detailed as possible:

  - Explain what information we are aiming for

  - Why this is of interest

  - No test results

  - No detailed script-based test cases

- Create at least one test case for a minimum of four non-functional test areas not included in the list above

  - Description of why these tests should be done and why they could be of interest

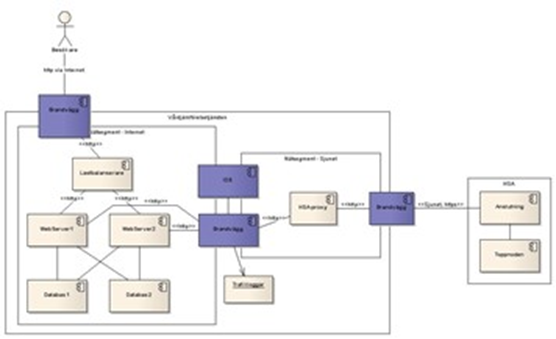

Background System Data

Since the real system architecture document (SAD) is security classified, we can't access the specifics, but there is a SAD template available with an image:

So we can assume that there are several web servers and databases to handle the performance load.

There is not much data available specifically for the video service, so to have some sort of test data, generic templates and statistics from the infrastructure as a whole are used. The data is probably heavily overestimated for a real-case scenario, since the infrastructure includes several different services. Below is some of the data that will be used in the test cases.

Connected to E-services (2020): 7646 organizations. Seven counties are missing from the list, but the list includes all E-services.

Connected to Video Service (2020): 120 municipalities. Since there is no specific documentation for the Video Service, let's assume that the number of organizations connected to E-services gives a close enough test level.

Authentication calls (2020): Here are the statistics for a service called National service platform. I added this data because it was the only data I found for this type of request, and it shows the number of HTTP calls made for authentication. Even though it is not the same service, it gives an idea of the load levels passing through these types of systems. All calls were made to the authentication service as HTTP requests to GetCredintialsForPersonIncludingProtectedPerson:

- 4 953 175 calls made in year 2020.

- Highest in a weekday: 1 063 477.

- Highest in one hour 613 815.

The other full login statistic found was this:

- TempUser (citizen), highest logins in a month: 14 038 074

- TempUser (citizen), total accounts: 292 507

- Clients, highest logins in a month: 1 940 264

- Clients, highest logins in an hour: 1 008 579 (8am)

- Clients, highest logins on a weekday: 1 667 948 (Monday)

A side note here: there are documented notices that this statistic might not be correct. The notice does not say in what way the metrics are incorrect, so I will still use the figures I found.

The request time is about 102 ms for the same GET request as above.

As said above, I couldn't find specific data for the Video Service, but we have the template that was used for the service. The numbers below are from the template document for generic IFK requirements.

Technical access:

- Clients/Users: 99.95% availability, which equals a maximum of 22 minutes of unplanned downtime a month.

- TempUsers (citizens), weekdays 8am – 6pm: 99.5% availability, which equals about 1 hour of unplanned downtime a month.

- Planned maintenance has a maximum of 2 hours a month.
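The downtime figures follow directly from the availability percentages. A minimal sketch of the arithmetic, assuming a 30-day month for Clients and 22 weekdays of 10 service hours (8am to 6pm) for TempUsers; both calendar figures are assumptions, not values from the template:

```python
def allowed_downtime_minutes(availability_pct, service_hours):
    """Unplanned downtime (minutes) permitted by an availability target over the given service hours."""
    return (1 - availability_pct / 100) * service_hours * 60

# Clients: 24/7 service, assuming a 30-day month.
clients = allowed_downtime_minutes(99.95, 30 * 24)
# TempUsers: weekdays 8am to 6pm, assuming 22 weekdays in a month.
temp_users = allowed_downtime_minutes(99.5, 22 * 10)

print(f"Clients: {clients:.1f} min/month")       # Clients: 21.6 min/month
print(f"TempUsers: {temp_users:.1f} min/month")  # TempUsers: 66.0 min/month
```

That is roughly 22 minutes and 1 hour, which matches the template.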

HTTP-request time:

- Navigation and browsing:

- Maximum of 2 seconds, includes browser rendering time.

- Must be met for 99% of the requests.

- E-services:

- Maximum of 4 seconds.

- Must be met for 99% of all requests.
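Checking a "99% of requests" requirement means computing the 99th percentile of the measured response times and comparing it with the limit. A minimal sketch using the nearest-rank percentile; the sample timings are made up for illustration:

```python
import math

def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]

def meets_requirement(samples_s, limit_s, pct=99):
    """True if the pct-th percentile response time is within the limit."""
    return percentile(samples_s, pct) <= limit_s

# Hypothetical navigation timings in seconds: 990 fast requests, 10 slow ones.
navigation = [0.8] * 990 + [2.5] * 10
print(percentile(navigation, 99))                  # 0.8
print(meets_requirement(navigation, limit_s=2.0))  # True
```

One more slow request (11 of 1 000) would push the 99th percentile to 2.5 seconds and fail the 2-second navigation requirement.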

Capacity requirements:

- E-services:

- Each service needs to be able to manage 2.000.000 site transactions a month.

- Maximum load of 10 site transactions a second.

- With maximum load the system should have a maximum request time of 12 seconds.

- With loads up to 5 site transactions a second, the maximum request time should be 4 seconds.

- Usability:

- Needs to reach acceptance criteria for Usability.
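The capacity numbers are easier to compare side by side: 2 000 000 transactions a month is a much lower average rate than the 10 transactions a second peak. A sketch, assuming a 30-day month, with a helper that returns the allowed request time for a given load. Applying the 12-second limit to everything above 5 and up to 10 transactions a second is my interpretation of the two thresholds:

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # assumption: 30-day month

def average_rate(transactions_per_month):
    """Average transactions per second if the monthly volume were spread evenly."""
    return transactions_per_month / SECONDS_PER_MONTH

def max_response_time_s(load_tps):
    """Allowed request time (s) at a given load, per the E-services capacity requirements."""
    if load_tps <= 5:
        return 4.0
    if load_tps <= 10:
        return 12.0  # interpretation: the 12 s limit applies above 5 up to the 10 tps maximum
    raise ValueError("load exceeds the 10 transactions/s requirement")

print(f"{average_rate(2_000_000):.2f} transactions/s on average")  # 0.77 transactions/s on average
print(max_response_time_s(3), max_response_time_s(10))            # 4.0 12.0
```

The gap between roughly 0.8 and 10 transactions a second shows why the peak, not the monthly volume, drives the load tests.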

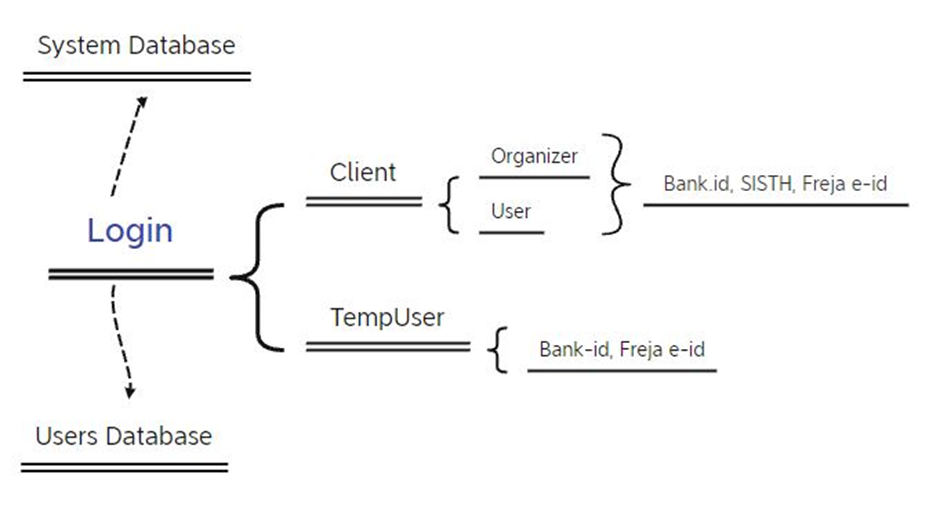

Login Form

The login function is one of the features that all users need to go through to be able to use the Video Service. Let's say it looks something like this:

A side note: TempUser refers to a user outside the organizations that have bought this service, such as a person invited to a video meeting with a doctor.

Client refers to the organizations that have bought, and have access to, this service in their system. Organizer is the person who created the meeting.

This form is connected to several high-risk functions, such as the Client database, the system databases (let's assume there are separate ones for meeting data storage and such), third-party APIs, authorization and authentication. It is also a critical area for usability and accessibility.

Test Cases

Definitions:

Scope: What feature to test.

Test Objectives: What to measure. High-level description.

Approach: How to measure.

Target Goals: Acceptance criteria.

Tools: Tools used.

Load Test

- Scope:

- Login page

- Load test on the login process from link to lobby.

- Normal usage for a weekday

- Scale login processes up and down from a normal weekday usage

- Duration 3 hours.

- Test Objectives:

- Evaluate the Video Service login page at the required level of users

- Users include both Clients and TempUsers

- Third-party e-identification services include SISTH, Bank-id, Freja e-id.

- Test Approach:

- Normal weekday login load

- 70% Logged in Clients (SISTH login)

- 30% Logged in TempUsers

- Ramp up gradually to maximum load for 5 minutes.

- Run at maximum for 1 hour.

- Ramp down users for 5 minutes.

- End load test

- Target

- Performance of system on maximum load

- 99% of login response times must be below 4 seconds

- Interaction with third-party services

- Simulated load: Maximum: Clients: 706005, TempUsers: 184144

- Tools

- LoadRunner
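The simulated load figures appear to match 70% of the highest Client logins in one hour (1 008 579) and 30% of the highest authentication calls in one hour (613 815), which is consistent with the 70/30 split in the approach. This pairing is my reconstruction, not stated in the source data. The sketch reproduces the numbers under that assumption, together with the doubled total used in the Stress Test:

```python
# Hourly peaks from the background data (2020 statistics).
CLIENT_PEAK_HOUR = 1_008_579    # Clients, highest logins in one hour
AUTH_CALLS_PEAK_HOUR = 613_815  # authentication calls, highest in one hour

def share(total, percent):
    """Integer share of a total, rounded down."""
    return total * percent // 100

clients = share(CLIENT_PEAK_HOUR, 70)
temp_users = share(AUTH_CALLS_PEAK_HOUR, 30)
total = clients + temp_users

print(clients, temp_users)  # 706005 184144
print(total, 2 * total)     # 890149 1780298
```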

Stress Test

- Scope:

- Login page

- Stress test on the login process from link to lobby.

- Ramp up to the maximum weekday level and hold it

- Test Objectives

- Evaluate the Video Service login page above the required level of users

- Users include both Clients and TempUsers

- Third-party e-identification services include SISTH, Bank-id, Freja e-id.

- Approach

- 70% Logged in Clients (SISTH login)

- 30% Logged in TempUsers

- Ramp up from 0 to normal weekday hourly login

- Ramp up to total weekday level of users

- Keep level above normal for 1 hour

- Fast scale down/log out users within 2 minutes

- Target Goal

- Performance at levels above maximum

- Breaking point

- Interaction with third-party services.

- Double maximum level during test: total: 1780298.

- Tools

- LoadRunner (since we use it for Load Test)
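The stress approach describes a load shape: a ramp to the normal level, a further ramp above it, a one-hour hold, and a fast scale-down within 2 minutes. A minimal sketch of that shape as keyframes with linear interpolation, useful for documenting the intended scenario before configuring it in the load tool. The 10-minute ramp durations are assumptions; the user levels are the Load Test maximum and its double:

```python
# (minute, target users); ramp timings are assumptions, user levels come from the test cases.
KEYFRAMES = [
    (0, 0),           # start
    (10, 890_149),    # ramp up to the normal weekday hourly level (assumed 10 min)
    (20, 1_780_298),  # ramp up to double maximum (assumed 10 min)
    (80, 1_780_298),  # hold above normal for 1 hour
    (82, 0),          # fast scale-down / log out within 2 minutes
]

def target_users(minute):
    """Linearly interpolate the target number of concurrent users at a given minute."""
    if minute <= KEYFRAMES[0][0]:
        return KEYFRAMES[0][1]
    for (t0, u0), (t1, u1) in zip(KEYFRAMES, KEYFRAMES[1:]):
        if t0 <= minute <= t1:
            return round(u0 + (u1 - u0) * (minute - t0) / (t1 - t0))
    return KEYFRAMES[-1][1]  # after the last keyframe

for minute in (0, 10, 50, 81, 82, 90):
    print(minute, target_users(minute))
```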

Other Stress Test Ideas for Video Service:

- Memory shortage if one or more servers go down, failed connections, processor behavior, recovery.

- Identification options unavailable (only one works)

- Upload large number of files to chat in video service

- Switching screen sharing between users at an unreasonably high speed

- Video activation feature, on/off.

- Invited international user level (third-party app connection)

Usability Test

- Scope:

- Login page

- Use heuristic methods to evaluate: Learnability, Efficiency, Errors, Satisfaction

- Inspection-based evaluation with 2 heuristic evaluators

- Test Objectives

- Selection of e-identification method, GDPR agreement, User support

- Approach

- Use Google Chrome browser

- Use available link to reach correct meeting

- Connect to customer service for help with login

- Authenticate through the BankID Mobile application

- Agree to GDPR agreement

- Cancel and leave the lobby

- Target Goal

- Visibility: The user should see the login options and be able to find support.

- Consistency: The system should be consistent in design, using the same types of buttons, links and forms.

- Familiarity: Language and images should be familiar, and their purpose easy to understand.

- Navigation: The system should be easy to navigate through, to select and to go back and forth in menus.

- Feedback: All user actions should give visible feedback, so the user understands that the action was activated.

- Constraints: Limits for error handling. The system should constrain the risk of login failure.

- Recovery: Allow users to correct mistakes and go back to the previous page.

- Charisma: The design should give a good impression.

- Tools

- Evaluation document.

Other Usability Test Ideas for Video Service:

- Monitored User test

- Unmonitored User test

- Task time measurement: time to log in, time to let people into the meeting from the lobby

- Async task-based test of critical interacting incidents (like logout by mistake, wrong identification service selection).

Accessibility Test

- Scope:

- Login page

- Screen reader compatible

- Evaluate whether the page structure and relationships can be programmatically determined

- Test Objectives

- Labels, Instructions, Error prevention, Form validation.

- Approach

- Activate NVDA screen reader for listening test

- Search for page title

- Use the keyboard only to follow the semantic labels

- Tab up/down authentication options

- Select SISTH identification option

- Select Bank-id identification option

- Select Freja e-id option

- Tab into/out of form field

- Fill in correct social identification number

- Accept GDPR Agreement

- Do not accept GDPR Agreement

- Target Goals

- All content must be text presented in a logical order

- All functionality must be available through tabbing

- All form labels, instructions for input must be programmatically associated with the element

- User must receive immediate feedback after all actions

- Both Error and Success feedback must be made available immediately

- Tools

- NVDA screen reader

- Axe DevTools extension may be used to identify problem areas.
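Axe covers this automatically, but the goal that form labels must be programmatically associated is simple enough to spot-check by hand. A minimal sketch using Python's standard `html.parser`, assuming the login form is available as static HTML (the markup below is hypothetical). It reports form controls that have no `<label for>` pointing at their id, no wrapping `<label>`, and no `aria-label` or `aria-labelledby`:

```python
from html.parser import HTMLParser

class LabelChecker(HTMLParser):
    """Collects form controls and checks whether each has an associated label."""
    CONTROLS = {"input", "select", "textarea"}
    SKIPPED_TYPES = {"hidden", "submit", "button"}

    def __init__(self):
        super().__init__()
        self.label_targets = set()  # ids referenced by <label for="...">
        self.controls = []          # (id, has_own_label) for each control
        self.label_depth = 0        # > 0 while inside a <label> (implicit association)

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "label":
            self.label_depth += 1
            if a.get("for"):
                self.label_targets.add(a["for"])
        elif tag in self.CONTROLS and a.get("type") not in self.SKIPPED_TYPES:
            own = bool(a.get("aria-label") or a.get("aria-labelledby") or self.label_depth)
            self.controls.append((a.get("id"), own))

    def handle_endtag(self, tag):
        if tag == "label" and self.label_depth:
            self.label_depth -= 1

    def unlabeled(self):
        return [cid for cid, own in self.controls
                if not own and (cid is None or cid not in self.label_targets)]

checker = LabelChecker()
checker.feed("""
<form>
  <label for="pnr">Personal identity number</label>
  <input id="pnr" type="text">
  <input id="consent" type="checkbox">
  <label><input type="radio" name="eid" value="bankid"> BankID</label>
</form>
""")
print(checker.unlabeled())  # ['consent']
```

Here the GDPR consent checkbox would be reported, since nothing names it for a screen reader.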

Other Accessibility Test Ideas for Video Service:

- Mobile: Click action on all features

- Mobile: Pinch to zoom start mode (should be deactivated)

- Contrast guidelines (use a browser extension for best testing)

- Sign language interpretation (said to be available for video service)

- AI based live-captions (see test case further ahead in this document)

- Live transcript should be available (same reason as stated in test case below)

- Keyboard-only: tab highlight on all selectable options

- Session timeout warnings

- Help features, visible help/information source option
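For the contrast guidelines, a browser extension does the work, but the WCAG contrast ratio is a short formula and handy for checking individual color pairs from the design. A sketch of the relative-luminance calculation; 4.5:1 is the WCAG AA minimum for normal-size text:

```python
def _linear(channel):
    """Convert an 8-bit sRGB channel to linear light."""
    c = channel / 255
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

def relative_luminance(hex_color):
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linear(r) + 0.7152 * _linear(g) + 0.0722 * _linear(b)

def contrast_ratio(fg, bg):
    """Contrast ratio between two colors, from 1 (identical) to 21 (black on white)."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)

print(round(contrast_ratio("#000000", "#ffffff"), 2))  # 21.0
print(contrast_ratio("#767676", "#ffffff") >= 4.5)     # True
```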

Security Test

- Scope:

- Login page

- Secure response when login attempt fails

- Verify authorization and authentication

- Test Objectives

- TempUser (citizen)

- Login credentials, session time duration.

- Approach

- Enter invitation link

- Select BankID identification method

- Enter credentials

- Accept GDPR agreement

- Verify TempUser-only access

- Use the browser's back button to go back to the login page

- Verify that user is still logged in

- Log out

- Target Goal

- Verify invitation link connection to correct meeting

- Verify TempUser not given Client or Organizer access

- Verify activated session time duration.

- Tools

- Manual testing

Other Security Test Ideas for Video Service:

- Invitation link activation time

- Source code availability in web browser (DevTools, inspect)

- Session timeout for administration pages (not accessible after reload or going back)

- Cookie encryption (not showing SISTH-id numbers)
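Parts of the cookie checks can be scripted once a `Set-Cookie` header has been captured, for example from the browser DevTools. A sketch using Python's `http.cookies` (Python 3.8+ for SameSite support). The sample header and the 10 to 12 digit pattern used to flag identity numbers stored in plain text are illustrative assumptions, not the service's actual cookie format:

```python
import re
from http.cookies import SimpleCookie

# Hypothetical heuristic: a run of 10-12 digits looks like an unencrypted identity number.
ID_NUMBER = re.compile(r"\d{10,12}")

def cookie_findings(set_cookie_header):
    """Return a list of problems found in one Set-Cookie header."""
    cookie = SimpleCookie()
    cookie.load(set_cookie_header)
    findings = []
    for name, morsel in cookie.items():
        if not morsel["secure"]:
            findings.append(f"{name}: missing Secure")
        if not morsel["httponly"]:
            findings.append(f"{name}: missing HttpOnly")
        if not morsel["samesite"]:
            findings.append(f"{name}: missing SameSite")
        if ID_NUMBER.search(morsel.value):
            findings.append(f"{name}: value looks like a plain identity number")
    return findings

print(cookie_findings("session=199001011234; Path=/; HttpOnly"))
```

An empty list means none of these simple checks failed; it does not prove the cookie value is encrypted.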

Penetration Test 1

- Scope:

- Login page

- Attempt to modify SISTH login

- Intercept the client HTTP request and change its values

- Test Objectives

- Client user, Login credentials, authorization control.

- Approach

- Follow a Client's SISTH login

- Search for the SQL system file containing encrypted credentials

- If found, analyze and modify the SQL query

- Attempt to decrypt data and change authorization credentials

- Target Goal

- Attempt to find vulnerabilities

- Verify that risk data is hidden within the internal system

- Ensure decryption is not possible.

- Tools

- Burp Suite
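To illustrate what the tester is looking for when an intercepted value ends up in an SQL query: if the server builds the query by string concatenation, a modified value can change the query itself, while a parameterized query treats it as data. A self-contained sketch with an in-memory SQLite table; the table, columns and payload are hypothetical and say nothing about the real system:

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE users (sisth_id TEXT, role TEXT)")
db.executemany("INSERT INTO users VALUES (?, ?)",
               [("1001", "client"), ("2002", "organizer")])

def role_vulnerable(sisth_id):
    # String concatenation: the intercepted value becomes part of the SQL statement.
    query = f"SELECT role FROM users WHERE sisth_id = '{sisth_id}'"
    return [row[0] for row in db.execute(query)]

def role_parameterized(sisth_id):
    # Placeholder: the value is always treated as data, never as SQL.
    return [row[0] for row in db.execute(
        "SELECT role FROM users WHERE sisth_id = ?", (sisth_id,))]

payload = "x' OR '1'='1"            # a value a tester might send via Burp Repeater
print(role_vulnerable(payload))     # returns every role: the condition was bypassed
print(role_parameterized(payload))  # []
```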

Localization Test

- Scope:

- Login page

- Verify that localization is available

- Android English Localization

- Test Objectives

- Mobile application, system: Android

- Change language to English

- Approach

- Enable pseudolocales (pseudoLocalesEnabled) in the app's build configuration

- Build and run the application

- In settings tap Language and input

- Select Language preferences and move English(XA) to the top of the list

- Verify that Login page language changes to English

- Verify layout

- Target Goal

- Layout of the login form should adjust depending on localization

- Language should change to English, or give option to change

- Login features should be presented according to requirement criteria

- Check whether hard-coded strings are visible (they are not pseudolocalized, so they stand out)

- Bi-directional text problems

- Tools

- Code IDE. Mobile test emulator.
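The en-XA pseudolocale roughly works by replacing letters with accented look-alikes, lengthening the text and wrapping it in brackets, so untranslated hard-coded strings and truncated layouts stand out. A rough Python imitation of that idea, useful for understanding what to look for or preparing test strings; it is not Android's implementation, and the character map and 30% padding are assumptions:

```python
ACCENTS = str.maketrans("aceinosuACEINOSU", "àçéîñöšûÀÇÉÎÑÖŠÛ")

def pseudolocalize(text, expansion=0.3):
    """Accent letters, pad the string by about 30% and wrap it in brackets."""
    accented = text.translate(ACCENTS)
    padding = "~" * round(len(text) * expansion)
    return f"[{accented}{padding}]"

print(pseudolocalize("Sign in"))  # [Šîgñ îñ~~]
```

A login label that still reads plain "Sign in" in the en-XA build is likely hard-coded, and a label whose closing bracket is cut off points to a layout that cannot grow.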

Other Localization Test Ideas for Video Service:

- Date and Time format

- Time zones

- Web application Content Language verification

- Application compatibility

The documentation mentions that the service is available in Swedish and English. It is also possible to connect with international organizations, so it is important to make sure the Video Service can adjust its locale features.

Compatibility Test

- Scope:

- Login page

- Verify that application runs on required android versions

- Test Objectives

- Login page on android devices

- Evaluate backwards compatibility

- Approach

- Download the application from the app store

- Click on the meeting link

- Start with Android 10, then repeat on each earlier version down to Android 5.1

- Select open digital meeting

- Select Freja e-id

- Enter login credentials

- Accept GDPR agreement

- Verify that video service activates

- Target Goal

- Android mobile app

- Verify backward compatibility

- Verify login capability on different android versions

Other Compatibility Test Ideas for Video Service:

- Different operating system

- Different browsers

- Forward compatibility

- Network bandwidth

- Network capacity
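The version sweep in the Compatibility Test approach (Android 10 down to Android 5.1) can be written down as a test matrix so that no combination is skipped. A sketch pairing each Android version, with the API level that emulator images are selected by, with each e-identification method; which methods must be covered on mobile is an assumption here:

```python
from itertools import product

# Android release -> API level, from Android 10 down to 5.1.
ANDROID_API_LEVELS = {
    "10": 29, "9": 28, "8.1": 27, "8.0": 26,
    "7.1": 25, "7.0": 24, "6.0": 23, "5.1": 22,
}
EID_METHODS = ["Freja e-id", "BankID"]  # assumption: extend as needed

def test_matrix():
    """All (version, api_level, e-id method) combinations, newest version first."""
    return [(version, api, method)
            for (version, api), method in product(ANDROID_API_LEVELS.items(), EID_METHODS)]

matrix = test_matrix()
print(len(matrix))  # 16
print(matrix[0])    # ('10', 29, 'Freja e-id')
```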

Video Service

Penetration Test 2

- Scope:

- Video Service

- Social Engineering, Phishing Attack

- File sharing

- Test Objectives

- File sharing scan, Video meeting chat

- Approach

- Start meeting with two SISTH-id users

- Share link/downloadable content in chat

- Meeting organizer attempts to download the content or follow the link

- Verify that content posted is scanned for malware

- Target Goal

- Attempt to find vulnerabilities

- Verify that phishing attacks in video meetings are detectable

- Penetration attack prevention

- Tools

- Manual testing
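Before the session, the tester needs a set of links to post in the chat, some harmless and some with typical phishing traits. A small sketch that labels candidate links by a few simple traits (no HTTPS, IP-address host, punycode host, host not on an allowlist). The allowlisted domain is hypothetical, and this is a test-data helper, not a substitute for the service's own scanning:

```python
import ipaddress
from urllib.parse import urlsplit

TRUSTED_DOMAINS = {"example.org"}  # hypothetical allowlist

def phishing_traits(url):
    """Return the suspicious traits of a URL (empty list if none are found)."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    traits = []
    if parts.scheme != "https":
        traits.append("not https")
    try:
        ipaddress.ip_address(host)
        traits.append("ip address host")
    except ValueError:
        pass
    if any(label.startswith("xn--") for label in host.split(".")):
        traits.append("punycode host")
    if not any(host == d or host.endswith("." + d) for d in TRUSTED_DOMAINS):
        traits.append("untrusted domain")
    return traits

for link in ["https://meet.example.org/room/1",
             "http://192.0.2.10/login",
             "https://xn--exmple-cua.org/bankid"]:
    print(link, phishing_traits(link))
```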

Other Penetration Test Ideas for Video Service:

- Locked login page after certain unsuccessful attempts

- Evaluate HTTP request visibility for all types of users

- Cookie format

- Visible error message text (all features, login, waiting room, video service, meeting creation)

- Logged in access time during inactivity.

- Access permissions for log files

Volume Test

- Scope:

- Video Service meeting planning

- Database capacity

- Verify data-loss prevention

- Test Objectives

- Video meeting memory storage, Database volume capacity

- Approach

- Login as Meeting organizer with SISTH-id

- Create more meetings than the maximum level

- Split meetings: 80% client to user, 20% Client to TempUser

- Inspect database memory for created meetings

- Compare database columns with unaffected database

- Target Goal

- Video meeting database storage capacity

- Verify maximum storage

- Ensure that no data is lost

- Check whether the system crashes

- Tools

- Depends on the database used. For obvious reasons, this information is not provided in the product documents, which state: “… Operation-related information must be kept at a conceptual block level, without reference to concrete products…”. Otherwise, I would recommend DbFit, which supports most SQL databases.
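Whatever database is used, the core check is the same: insert more meetings than the expected maximum, with the 80/20 split from the approach, and verify that every row written can be read back. A sketch against an in-memory SQLite database as a stand-in; the schema and the 10 000 meeting count are hypothetical:

```python
import sqlite3

def run_volume_test(n_meetings):
    """Insert n_meetings with an 80/20 split and return the row count per meeting kind."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE meetings (id INTEGER PRIMARY KEY, kind TEXT NOT NULL)")
    # 80% Client to user, 20% Client to TempUser, as in the approach.
    n_temp = n_meetings * 20 // 100
    rows = [("client_to_user",)] * (n_meetings - n_temp) + [("client_to_tempuser",)] * n_temp
    with db:  # commit all inserts in one transaction
        db.executemany("INSERT INTO meetings (kind) VALUES (?)", rows)
    counts = dict(db.execute("SELECT kind, COUNT(*) FROM meetings GROUP BY kind"))
    db.close()
    return counts

counts = run_volume_test(10_000)
assert sum(counts.values()) == 10_000, "data loss: rows missing after insert"
print(counts)
```

Against the real database, the same read-back comparison is what DbFit tables would express.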

Other Volume Test Ideas for Video Service:

- System alerts if maximum volume is reached

- Third-party service behavior

- File upload time

- Add .csv file of users

- Meeting cancellation

Spike Test

- Scope:

- Video Service Multi-party meeting

- Spike in video meeting attendees

- Detect system limitations during video meeting

- Test Objectives

- Video meeting, Capacity over required level

- Approach

- Login as Meeting organizer with SISTH-id

- Invite and add 5 SISTH users from internal network

- Add another 200 users

- Run meeting for 1 hour

- Logout all attendees

- Target Goal

- Video meeting resource capacity

- Verify maximum spike level

- Evaluate recovery behavior

- Tools

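- Assuming the system is cloud-based, Lighthouse CI could be used to track page performance during the spike. Lighthouse CI does not generate load itself, so the added attendees need to come from a separate load source.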

Other Spike Test Ideas for Video Service:

- SISTH login verification (start of workday, emergency red alert)

Endurance Test

- Scope:

- Video Service Multi-party meeting

- Endurance of an active, long-running video meeting

- Verify data-loss prevention

- Detect memory leaks

- Test Objectives

- Video meeting database, Video meeting time capacity

- Approach

- Login as Meeting organizer with SISTH-id

- Invite and add 5 SISTH users from internal network

- All attendees should have activated camera

- Activate and post in the chat section

- Activate screen sharing

- Run meeting for 8 hours

- Log out all attendees

- Target Goal

- Video meeting resource capacity

- Verify maximum endurance level

- Ensure that there are no memory leaks

- Tools

- Assuming the system is cloud-based, Kubernetes on some PaaS could be used
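During the 8-hour run, a leak usually shows up as memory that keeps growing while the load stays constant. A sketch of one way to judge this from periodic memory samples (for example, taken from container metrics every hour): fit a least-squares slope and flag the run if memory grows faster than a chosen threshold. The samples and the 1 MB/hour threshold are hypothetical:

```python
def slope(xs, ys):
    """Least-squares slope of ys over xs."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den

def leak_suspected(hours, memory_mb, max_growth_mb_per_hour=1.0):
    """True if memory grows faster than the threshold over the run."""
    return slope(hours, memory_mb) > max_growth_mb_per_hour

hours = [0, 1, 2, 3, 4, 5, 6, 7, 8]
stable = [512, 515, 511, 514, 512, 513, 511, 514, 512]
growing = [512 + 20 * h for h in hours]
print(leak_suspected(hours, stable), leak_suspected(hours, growing))  # False True
```

Fitting a slope rather than comparing first and last samples keeps normal fluctuations from triggering a false alarm.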

Other Endurance Test Ideas for Video Service:

- Normal response time for loading video service

- Response time when continually changing settings during a meeting

- Behavior when adding/logging out users regularly

- Third-party service connection behavior and closure (like Skype)

- Mobile application response time during long sessions.

- Memory consumption

Baseline Test

- Scope:

- Video Service Multi-party meeting

- Traceability of user behavior

- Verify encrypted data

- Detect vulnerabilities

- Test Objectives

- Video meeting database, Video meeting logfiles.

- Approach

- Login as Meeting organizer with SISTH-id

- Invite and add 5 SISTH users with SISTH access.

- Activate and share a document in the chat section

- Log out all attendees

- Search the security service for logfiles

- Verify encrypted data

- Verify size of log data.

- Target Goal

- Video baseline from traceability template

- All system usage from users and admins must be encrypted

- Data storage should be on a “reasonable level”

- Log files need to be protected from unauthorized access.

- Tools

- JMeter. Possibly Burp Suite as a next step if the log files are too easily reached.

Other Baseline Test Ideas for Video Service:

- Response time, Navigation

- Response time, login

- Response time, third-party applications

- Navigation capacity, normal level

- Navigation capacity, top load level

- Authentication

- Traceability, what information from user behavior is available on the website.

(For the internal system, which we can assume includes searching/adding video meetings: at 20 page views/transactions a second, max response time 6 seconds; at 10 page views/transactions a second, max response time 2 seconds.) All information for these ideas can be found in the various public documents and templates of the Video Service.
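Once the baseline is recorded, later runs are compared against it and against the absolute limits. A sketch of that comparison, assuming measurements are stored as metric name to value, that lower is better for every metric, and that a 10% regression tolerance is acceptable. The metric names and values are hypothetical; only the 2 and 6 second limits come from the internal-system figures:

```python
def regressions(baseline, current, tolerance=0.10):
    """Metrics where the current value is more than `tolerance` worse (higher) than baseline."""
    return {name: (baseline[name], value)
            for name, value in current.items()
            if name in baseline and value > baseline[name] * (1 + tolerance)}

def limit_violations(current, limits):
    """Metrics that exceed an absolute requirement."""
    return {name: value for name, value in current.items()
            if name in limits and value > limits[name]}

baseline = {"login_s": 1.2, "navigation_10tps_s": 0.9, "navigation_20tps_s": 3.1}
current = {"login_s": 1.25, "navigation_10tps_s": 1.3, "navigation_20tps_s": 3.0}
limits = {"navigation_10tps_s": 2.0, "navigation_20tps_s": 6.0}

print(regressions(baseline, current))     # {'navigation_10tps_s': (0.9, 1.3)}
print(limit_violations(current, limits))  # {}
```

Keeping the two checks separate matters: a run can be within requirements and still have regressed noticeably against the baseline.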

Accessibility Test

- Scope:

- Video Service Multi-party meeting

- Captions and Transcripts

- Verify Hearing Accessibility

- Test Objectives

- Video meeting, multi-party service, text synchronization.

- Approach

- Click on invitation link

- Verify user with e-identification

- Attend video meeting

- Find settings, turn on live caption

- Verify accessible synchronized captions.

- Target Goal

- Video meeting accessibility for hearing disabilities

- All live multimedia events that contain dialog and/or narration must be accompanied by synchronized captions.

- Captions should be verbatim for unscripted and live content.

- When speech is too unclear to perceive, the captions should say so.

- Tools

- Manual testing.

I know that this test case is not possible on the Video Service as of today. Live captions are not mentioned in any of the video service documentation, and I am adding this test case for exactly that reason. This service targets the public and professionals in healthcare. Without captions, the service excludes a significant percentage of people, whether the meeting is two-party or multi-party.
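Verifying "synchronized" captions needs a measure. One option, assuming the captions can be exported as WebVTT and that the tester notes when each utterance actually started, is to compare cue start times with those reference times and report the worst lag. A sketch; the 2-second tolerance and the sample cues are assumptions, not requirements from the service:

```python
import re

CUE_TIME = re.compile(r"(\d{2}):(\d{2}):(\d{2})\.(\d{3}) -->")

def cue_starts(webvtt):
    """Start time in seconds of each cue (only the hh:mm:ss.mmm timestamp form is handled)."""
    return [int(h) * 3600 + int(m) * 60 + int(s) + int(ms) / 1000
            for h, m, s, ms in CUE_TIME.findall(webvtt)]

def worst_lag(cues, spoken_at):
    """Largest delay between speech start and the matching caption, paired in order."""
    return max(c - s for c, s in zip(cues, spoken_at))

vtt = """WEBVTT

00:00:01.500 --> 00:00:04.000
Good morning, can you hear me?

00:00:05.200 --> 00:00:07.000
Yes, loud and clear.
"""
lag = worst_lag(cue_starts(vtt), spoken_at=[0.8, 4.9])
print(f"worst lag {lag:.1f} s, within tolerance: {lag <= 2.0}")
```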

Load Test

- Scope:

- Video meeting, multi-party service

- Load test on active video meetings.

- Normal usage for a weekday

- Duration 1 hour

- Test Objectives:

- Evaluate the Video Service with active meetings at the required level of users

- Users include SISTH-id access

- Test Approach:

- Normal weekday load

- 60% meetings with two users

- 20% meetings with 7 users

- 20% meetings with 10+ users

- Ramp up gradually to maximum load for 1 minute

- Run at maximum for 1 hour.

- Ramp down users for 1 minute.

- End load test

- Target Goal

- Performance of system on maximum load

- At peak load, response time should not exceed 6 seconds

- Network connection should be set to different speed levels

- Measure performance to discover issues on maximum load.

- Tools

- Lighthouse CI (page performance metrics; the load itself needs a separate load source)
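The meeting mix in the approach translates into a number of concurrent participants for a given number of meetings. A sketch, assuming 1 000 concurrent meetings (a hypothetical figure; the text does not give one) and counting "10+ users" meetings as exactly 10 participants, which makes the result a lower bound:

```python
# (share of meetings, participants per meeting) from the approach
MEETING_MIX = [(0.60, 2), (0.20, 7), (0.20, 10)]

def participants(total_meetings):
    """(meeting size, number of meetings, participants) for each part of the mix."""
    plan = []
    for share, size in MEETING_MIX:
        meetings = round(total_meetings * share)
        plan.append((size, meetings, meetings * size))
    return plan

plan = participants(1_000)
for size, meetings, people in plan:
    print(f"{meetings} meetings x {size} users = {people}")
print("total participants:", sum(p for _, _, p in plan))  # total participants: 4600
```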

Other Load test ideas:

- Latency/Lag time

- Video image frame rate

- Evaluate bottlenecks

- Connection time

- HLS on the mobile application (which I assume is used at least for iOS)

- CPU usage

- KPI monitoring