This post, “How can we adjust to Working Agile with Cloud-based Microservices in CI/CD”, is my software testing degree project, written during the last course of my software test engineer education. Not all information from the thesis is included, and some text has been changed. The original document is available as a PDF: Link to pdf here. This is part 5 of 5.

Discussion of the analysis: working agile in cloud-based environments

The next sections discuss the findings of the analysis, along with my thoughts, some opinions, and conclusions about those findings.

Difficulties with working agile

What are the main problems companies are facing today within microservice testing when working agile in a cloud-based environment?

No participant named a single area as the main problem, but most focused on non-technical areas. It was not surprising that the discussion turned to test automation versus no test automation, since this is one of the most common discussions I see among test engineers today. However, few participants mentioned any technical test structure other than end-to-end testing as a problem, and this led me to research organizational structure more than I had expected. The reading and video material showed the opposite, with the exception of end-to-end testing. One question this raises is whether this is purely opinion-based or, to some degree, knowledge-based. Test engineers who do not come from a technical background and mostly focus on manual testing might not know what could be done with test automation. And could the focus on test automation be influenced by developers and CI/CD pressure? For readability, I will keep this division throughout this section.

One problem I see, and would call the main problem, is communication. As was said in the discussions, too little information flows between developers, testers and management, and between teams. When going from waterfall to agile, we gained a more parallel process and more independence. I do not question this: agile brings more benefits and faces fewer problems in a cloud-based system environment. But could that independence cause teams to prioritize their own work over teamwork outside the team, regardless of the chances for improvement? As I found in my research, there is usually just one test engineer in a team, and it is easy to overlook that one person’s “actual” work tasks. Again, there is the “push to prod” statement: get the release out and deal with potential problems later. Larger businesses tend to create test strategies at a high level, which leaves room for great planning within the teams but also leaves room for ignorance and down-prioritization.

End-to-end testing is a clear problem: the tests are flaky and take a long time to set up and run. The conclusion is that the result is not worth the effort if we can cover the same ground in other ways. As mentioned in 6.1.3 System complexity, many areas within microservices can be problematic. When I started this research, I thought it would be easy to focus on best practices for lower-level testing and to look at performance within the services as they communicate with each other. I expected unit tests, API tests and end-to-end tests to be enough. The insight and knowledge gained along the way showed a different result. In my opinion, though, it is correct to focus on insight between services. The more services, containers and databases we add, the more activity we need to maintain. One conversation I had was about problems in the software outside specific services/containers. In that example, a database caused several critical issues that did not show up in the logs or issue reports. The team had unit tests, a test environment, regression tests and end-to-end tests in place, which shows the importance of testing integration. I will summarize this topic with a statement from J.B. Rainsberger’s article series on integrated tests: “You write integrated tests because you can’t write perfect unit tests. You know this problem: all your unit tests pass, but someone finds a defect anyway. Sometimes you can explain this by finding an obvious unit test you simply missed, but sometimes you can’t. In those cases, you decide you need to write an integrated test to make sure that all the production implementations you use in the broken code path now work correctly together.” (J.B. Rainsberger, 2009/2021).

This leads to the problem of knowledge: testers who lack knowledge of coding and microservice architecture could never set this as a priority in a test plan.

The two topics above came together in my analysis when I researched the reasons for tool sprawl and access. Today most companies use a PaaS of some kind, such as GCP, Azure or AWS. That is where I started the analysis: what type of monitoring could be done within GCP, and would it be possible to cover the topics mentioned as best practice? The problem I found is that both lack support for one monitoring feature or another. Some features can be added through a third-party tool, but the problem remains: several tools are used for different features, and even when they integrate with the PaaS, their data cannot be combined. Teams decide for themselves which tools to use, probably with the PaaS as the common connection to the rest of the organization. And of course, teams have their own preferences about what they like to work with. With these facts, it is easy to see the cause of tool sprawl.

Approaches for cloud-based microservices

What could be a best practice approach to reach most effective testability and test coverage for a microservice environment?

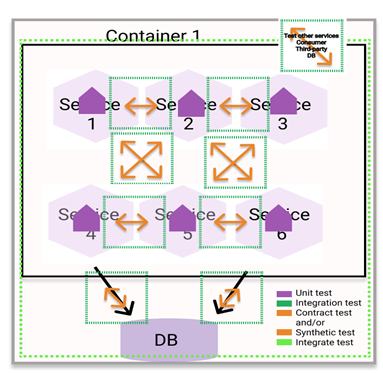

This was the most complicated part to research and analyze, and not only because of its complexity. To keep the data dependable, neutral and manageable, I sometimes had to decide how to prioritize the research. I also had to ask myself whether a decision was based on the analysis or on my own past knowledge. As mentioned in section 5.5 Application coverage, I used the diagram to follow the coverage and placed the analysis data into it. It ended up something like this:

I do believe unit tests are important, if only to make sure the functions work as expected. Here we can also work on communication and knowledge: developers who write unit tests gain more insight into testing and can build their skills in writing clean code. To clarify, I am not saying that developers write bad code or lack skills. My point is that we learn and develop through our work processes. However, unit tests should not be implemented everywhere or be seen as coverage for the entire service or application.

My conclusion connects to the first topic of this research: more focus on the integrations between services. This is where contract testing fits; it can be implemented early in development and helps prevent flaky tests. During the research I came upon a text that I felt explains where my conclusion was going: “Strong integration tests, consisting of collaboration tests (clients using test doubles in place of collaborating services) and contract tests (showing that service implementations correctly behave the way clients expect) can provide the same level of confidence as integrated tests at a lower total cost of maintenance” (J.B. Rainsberger, 2015)

The quote says “the way clients expect”, and that is where we can gain stability and reliability: CDC, consumer-driven contract testing. With mobile applications and similar platforms, we need to focus on the consumer experience. With unit tests in the backend, test automation in the deployment pipeline and a small amount of integration testing, we have coverage of the backend. On top of this, we can set up performance tests such as stress and load testing.
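To make the CDC idea concrete, here is a minimal sketch in plain Python. It follows the split in the quote above: the consumer runs collaboration tests against a test double generated from its contract, and the provider is verified against that same contract. Everything here is hypothetical and made up for illustration: the `Contract`/`Interaction` format, the `mobile-app` and `order-service` names and the `/orders/42` endpoint. It is not the API of any real contract-testing tool. Provider calls are modelled as plain functions so the sketch runs without a network.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

# A provider call is modelled as (method, path) -> (status, body) so the
# sketch runs without a network; a real setup would make HTTP requests.
Client = Callable[[str, str], tuple[int, dict[str, Any]]]


@dataclass
class Interaction:
    """One request/response pair the consumer relies on."""
    description: str
    method: str
    path: str
    status: int
    body_schema: dict[str, type] = field(default_factory=dict)  # field name -> type


@dataclass
class Contract:
    consumer: str
    provider: str
    interactions: list[Interaction]


def stub_from_contract(contract: Contract) -> Client:
    """Test double for the consumer's collaboration tests, built from the contract."""
    routes = {
        (i.method, i.path): (i.status, {name: t() for name, t in i.body_schema.items()})
        for i in contract.interactions
    }

    def stub(method: str, path: str) -> tuple[int, dict[str, Any]]:
        return routes[(method, path)]

    return stub


def verify_provider(contract: Contract, provider: Client) -> list[str]:
    """Replay every interaction against the provider and list contract violations."""
    failures = []
    for i in contract.interactions:
        status, body = provider(i.method, i.path)
        if status != i.status:
            failures.append(f"{i.description}: expected status {i.status}, got {status}")
            continue
        for name, expected in i.body_schema.items():
            if name not in body:
                failures.append(f"{i.description}: missing field '{name}'")
            elif not isinstance(body[name], expected):
                failures.append(f"{i.description}: '{name}' should be {expected.__name__}")
    return failures


# Hypothetical contract: a mobile app relies on GET /orders/42 from an order service.
contract = Contract(
    consumer="mobile-app",
    provider="order-service",
    interactions=[
        Interaction("get existing order", "GET", "/orders/42", 200,
                    {"id": int, "status": str, "total": float}),
    ],
)


def order_summary(client: Client) -> str:
    """Consumer code under test: formats an order for display."""
    status, body = client("GET", "/orders/42")
    return f"Order {body['id']}: {body['status']}" if status == 200 else "unavailable"


def order_service(method: str, path: str) -> tuple[int, dict[str, Any]]:
    """Hypothetical provider that has renamed 'total' to 'amount'."""
    return 200, {"id": 42, "status": "shipped", "amount": 99.5}


print(order_summary(stub_from_contract(contract)))  # consumer side runs against the stub
print(verify_provider(contract, order_service))     # ["get existing order: missing field 'total'"]
```

In this design, both sides test against the same contract. The consumer never needs the real provider or a shared test environment. The provider learns about a breaking change, here a renamed field, in its own pipeline rather than in production. Dedicated contract-testing tools add things this sketch skips, such as sharing contracts between teams and richer matching rules.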

With CDC and API tests on the consumer side, the need for test environments decreases, which means testing in production can be prioritized. Test engineers can focus on manual testing when new releases go into production. For developers, the risks of system crashes are caught earlier in the process, and a release does not cause a bottleneck of bug fixes.

Alongside a more organized shift-left approach, the analysis shows that monitoring is significant. Monitoring, too, should be implemented early in the development process, through synthetic monitoring. With synthetic monitoring we can cover most areas of an application and identify issues before they reach the end user. It also gives developers more insight into the actual behavior of the application and its functions.
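A synthetic check is a scripted user journey that runs against the system and is judged on both correctness and response time. Here is a minimal sketch of that idea, with some assumptions. The journey steps (`open_start_page`, `log_in`, `add_to_cart`, `checkout`), the `cart-service` name and the 500 ms budget are all hypothetical. Each step is a plain function here, where a real check would make an HTTP call or drive a browser. A monitoring tool would also normally run the script on a schedule from outside the system and raise an alert on failure.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class CheckResult:
    name: str
    ok: bool
    latency_ms: float
    detail: str


def run_synthetic_check(name: str, step: Callable[[], bool], max_latency_ms: float) -> CheckResult:
    """Run one scripted user step; it must succeed and stay under the latency budget."""
    start = time.perf_counter()
    try:
        passed = step()
        detail = "ok" if passed else "unexpected result"
    except Exception as exc:  # a crashing step counts as a failed check
        passed, detail = False, f"error: {exc}"
    latency_ms = (time.perf_counter() - start) * 1000
    if passed and latency_ms > max_latency_ms:
        passed, detail = False, f"too slow: {latency_ms:.0f} ms > {max_latency_ms:.0f} ms"
    return CheckResult(name, passed, latency_ms, detail)


def run_journey(steps: list[tuple[str, Callable[[], bool]]],
                max_latency_ms: float = 500.0) -> list[CheckResult]:
    """Run a scripted user journey in order and stop at the first failing step."""
    results = []
    for name, step in steps:
        result = run_synthetic_check(name, step, max_latency_ms)
        results.append(result)
        if not result.ok:
            break
    return results


# Hypothetical journey; each step would normally be an HTTP call or a browser action.
def open_start_page() -> bool:
    return True


def log_in() -> bool:
    return True


def add_to_cart() -> bool:
    raise ConnectionError("cart-service unreachable")


def checkout() -> bool:
    return True


journey = [("open start page", open_start_page), ("log in", log_in),
           ("add to cart", add_to_cart), ("checkout", checkout)]

for r in run_journey(journey):
    print(f"{r.name}: {'PASS' if r.ok else 'FAIL'} ({r.detail})")
```

The journey stops at the first failing step because a real user could not continue past it either. The report therefore points straight at the broken interaction, before any end user hits it.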

The problems highlighted in the research were tool sprawl and unorganized logs. During a conversation I had while analyzing the different tools and software used by a company, the person I spoke with said this:

“We don´t have to reinvent the wheel. We just have to find the best resources that lessen the scattering”

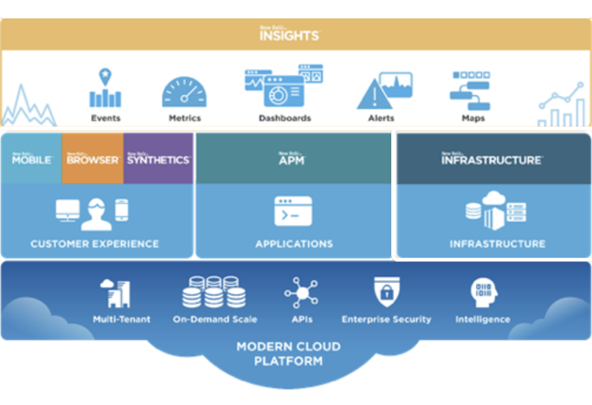

That statement pinpoints the exact problem: agile teams choose tools within each team and have diverse needs and preferences. As found in section 7.1.1 Difficulties, most PaaS offerings cannot monitor all the areas mentioned, not even with third-party extensions. In this research I tried to find a tool that would let us:

+ Integrate with the PaaS

+ Use MELT:

  - Metrics

  - Events

  - Logs

  - Traces

+ Synthetic monitoring

+ Separate API testing

Preferably, this would not require any extension integrations beyond the PaaS, IaaS and SaaS already in use.
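The reason for wanting MELT in one place can be shown with a small data-structure sketch. Once metrics, events, logs and traces can be joined on a shared key, one request becomes a single timeline. When the four types sit in different tools, that join has to be done by hand. The sketch assumes that every record carries a shared trace id. In practice, logs and traces often share one through context propagation, while metrics are usually aggregated and linked by time window or service instead, and the sketch glosses over this. The record fields, service names and values are hypothetical, loosely modelled on the database example from the Difficulties section. None of it is New Relic’s data model or API.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Telemetry:
    kind: str         # one of the MELT types: "metric", "event", "log", "trace"
    source: str       # service or tool that emitted the record
    trace_id: str     # correlation id, assumed to be propagated to every record
    timestamp: float  # seconds since the request started
    payload: dict = field(default_factory=dict)


def correlate(records: list[Telemetry]) -> dict[str, list[Telemetry]]:
    """Group MELT records by trace id, each group sorted by time."""
    groups: dict[str, list[Telemetry]] = defaultdict(list)
    for record in records:
        groups[record.trace_id].append(record)
    return {tid: sorted(group, key=lambda r: r.timestamp) for tid, group in groups.items()}


def incident_view(records: list[Telemetry], trace_id: str) -> list[str]:
    """One line per record: the combined timeline a single dashboard would show."""
    return [f"{r.timestamp:5.2f}s {r.kind:<6} {r.source}: {r.payload}"
            for r in correlate(records).get(trace_id, [])]


# Hypothetical records as they might arrive, unordered, from different tools.
records = [
    Telemetry("metric", "orders-db", "abc123", 0.40, {"connections_in_use": 100}),
    Telemetry("trace", "api-gateway", "abc123", 0.00, {"span": "GET /orders", "duration_ms": 1200}),
    Telemetry("event", "deploy-bot", "zzz999", 0.10, {"deployed": "order-service v2"}),
    Telemetry("log", "order-service", "abc123", 0.05, {"level": "WARN", "msg": "retrying db query"}),
]

for line in incident_view(records, "abc123"):
    print(line)
```

For request `abc123`, the slow trace, the retry warning and the saturated database connections then appear next to each other. A database problem that never reaches the application logs as an error still shows up in the same view.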

The results were sparse: few options offer this broad coverage in their tools. New Relic was one that had all these features, and during this research I had access to investigate the tool more closely. Here we can see the infrastructure:

(New Relic, 2021)

My conclusion is that the tool would be able to do everything that is currently scattered across different tools in the teams. This brings us back to the theme of communication, which will be the final main part of this discussion of best-practice findings in this degree project.

Communication

Above, we have gone through:

- Unit tests

- Integration tests (provider-driven)

- CDC, consumer-driven contract tests

- CD automation tests

- Synthetic monitoring

- Manual tests

- Distributed tracing

- System monitoring/observability

Most of these can, and I would go as far as to say will, require the teams, developers and testers alike, to communicate and work together. They will need to learn from and teach each other about their areas of expertise across team borders.

I never expected this research to give a straight answer pointing to one ultimate architecture. Everything obviously depends on individual factors such as the product, company, organizational structure, software used and so on. There will always be room for improvement as knowledge and development advance, and these findings should be seen as a reference, one part of the whole. The themes found point in one direction: when it comes to software testing, we cannot focus on either the organization or the system architecture and ignore the other.

Priority

What are the impressions of the priority of testing microservices/container applications among test leads?

The conclusion here is simple: the impression is that testing is not seen as a priority for microservices. All answers from the discussions and interviews except one started with “no”. The remaining answer started with “it depends”, and it also explained that it is easy to ignore testing if the changes are small and the monitoring does not show critical issues. I count this as a no as well, and as down-prioritization of testing. One theme I found, and have analyzed back and forth, is that test engineers seem to downplay their own importance. By this I mean that there seems to be a pattern in discussions about testing and team organization. Testers respond by agreeing to release early and by taking on more tasks, arguing that it will be more work but they will handle it. My impression of developers is the opposite. Their answer would be something like: this is more workload, we need more developer resources. Is this behavior a remnant of old times, doing as we always have done? In a waterfall environment with a monolithic application, a smaller team of test engineers could more easily keep a full overview of tests and monitoring. But with agile teams split up and a more complex architecture, I cannot ignore the thought that testers need to speak up. Management also needs to give testing budget priority, instead of or alongside that new feature. This conclusion also comes from the research material, with its strong focus on test automation and writing code as the ultimate answer to issues of all kinds.

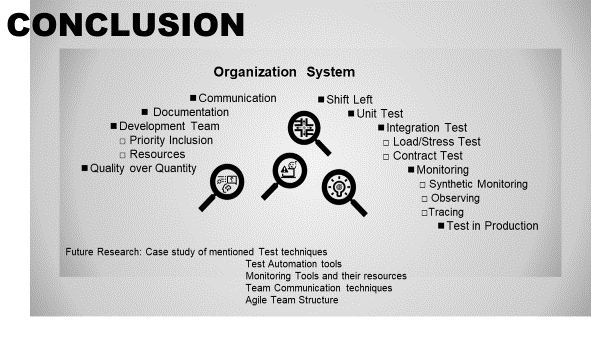

Conclusion

This study uses two approaches to investigate problem areas, best approaches and the test engineer’s role in the industry. The research was done through:

- Discussions and semi-structured interviews with test engineers about their knowledge, experience, and opinions.

- Investigation of reading and video material, and experimental research on some of the material found.

Below are the conclusions from the discussions and findings for each of the study’s three main questions.

What are the main problems companies are facing today within microservice testing when working agile in a cloud-based environment?

On this topic, most participants focused on non-technical subjects.

A lack of documentation was mentioned as a concern. This applied both to the system structure, which is needed for an overview of what and how to test, and to data collection.

Overly independent teams were given as the reason for a variety of problems. These included testing being overlooked in favor of fast deployment, and tool sprawl, where it becomes difficult to know where to find everything needed to monitor and trace data. They also included less knowledge sharing or collaboration for reliable test coverage and architecture.

End-to-end or integrated tests are flaky, time-consuming and do not give reliable results.

System complexity makes it easy to overlook critical areas or unseen problems in the application, and to test for the expected rather than the unexpected.

Test environments and test doubles are too different from the production environment. One participant mentioned an overconfidence in the staging environment that causes unnecessary issues.

What could be a best practice approach to reach most effective testability and test coverage for a microservice environment?

Shift left: testing early lets us avoid bottlenecks and bugs in production.

Focus on low-level testing within the services. Here participants mentioned unit tests and synthetic monitoring.

Test automation in the deployment pipeline, as a check before going to production.

Communication between test engineers and developers, knowledge exchange and common priorities. This includes CDC, consumer-driven contract testing.

Minimize tool sprawl with software that can integrate with the PaaS in use and provide connected dashboards for metrics, events, logs and traces, as well as synthetic monitoring.

Test in production: internal testing with real user data, instead of a test environment or test doubles, gives an accurate view of system behavior in production.

Gain control over the testing process, as in the example in section 6.2.5. There, the company changed to parallel testing and development and added more people to the test groups, instead of working with limited resources or too few test engineers in each team. This means a test engineering team with organizational knowledge, development knowledge, and manual and regression testing knowledge. It also includes specific specialist areas such as security, platform, accessibility and more.

Without effective communication and enough resources, it would not matter how much testing, how many techniques or how many test levels we have. There will be bottlenecks and issues.

What are the impressions of the priority of testing microservices/container applications among test leads?

When participants were asked whether they thought testing was seen as a priority, all answers started the same way: with “no” or “it depends”.

Regarding the opinions of management and test engineers, participants mentioned down-prioritization of testing in favor of production. Building and releasing new features was given more importance than application performance.

One participant used the phrase “Push to prod trap”. Others gave similar answers, explaining that it is easy to overlook testing of minor changes in favor of fast deployment.

Participants mentioned that test automation is sometimes seen as a replacement for other types of testing. Most of them saw test automation as a tool that can help the testing process but not replace manual testing (see the explanation of the use of manual testing in section 6.3.3).

There was a pattern of one test engineer per team/service, which did not seem to change with larger systems or higher deployment frequency. When more resources were needed, extra developers were more likely to be added to a team than more test engineers.

My conclusion

There is a need to focus on both system and organization.

Without effective communication and enough resources, it would not matter how much testing, how many techniques or how many test levels we have. There will be bottlenecks, constraints and other issues.

That was all for this series of posts. If you read all of them, I must say I’m impressed; this research ended up much larger than I expected. I will continue researching this topic, both the subjects mentioned here and the more technical areas. I always enjoy a good conversation about testing and appreciate new perspectives, so please feel free to reach out if you want to meet over a digital coffee and discuss the topic further.

Links to previous posts in this series can be found here:

Link to part 1

Link to part 2

Link to part 3

Link to part 4